Welcome to Reality~Trivia

june 2024

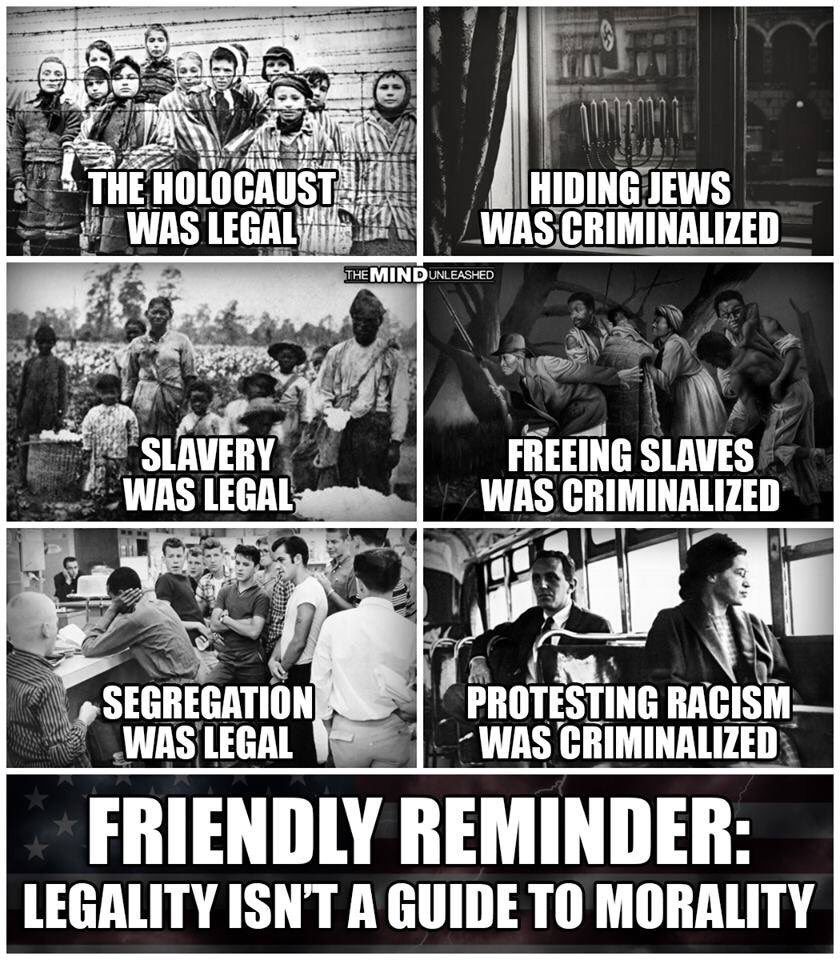

“Civil disobedience is not our problem. Our problem is civil obedience. Our problem is that people all over the world have obeyed the dictates of leaders…and millions have been killed because of this obedience…Our problem is that people are obedient all over the world in the face of poverty and starvation and stupidity, and war, and cruelty. Our problem is that people are obedient while the jails are full of petty thieves… (and) the grand thieves are running the country. That’s our problem.”

― Howard Zinn

-------------------------------------------------------

the apocalypse of settler colonialism

by dr. gerald horne

"Through the centuries, the Republic that eventuated in North America has maintained a maximum of chutzpah and a minimum of self-awareness in forging a creation myth that sees slavery and dispossession not as foundational but inimical to the founding of the nation now known as the United States. But, of course, to confront the ugly reality would induce a persistent sleeplessness interrupted by haunted dreams, so thus this unsteadiness has prevailed."

Oliver Stone & Peter Kuznick: The Untold History of the United States

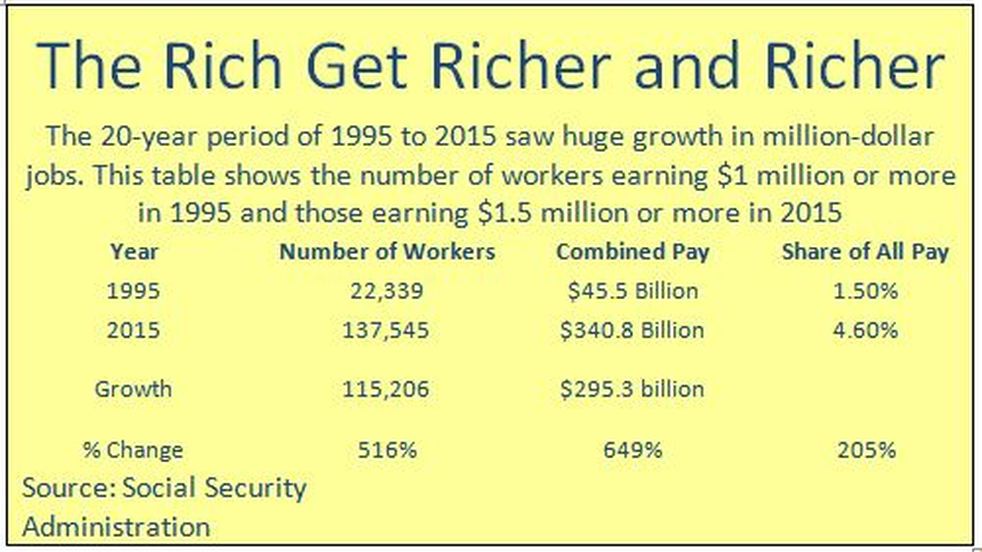

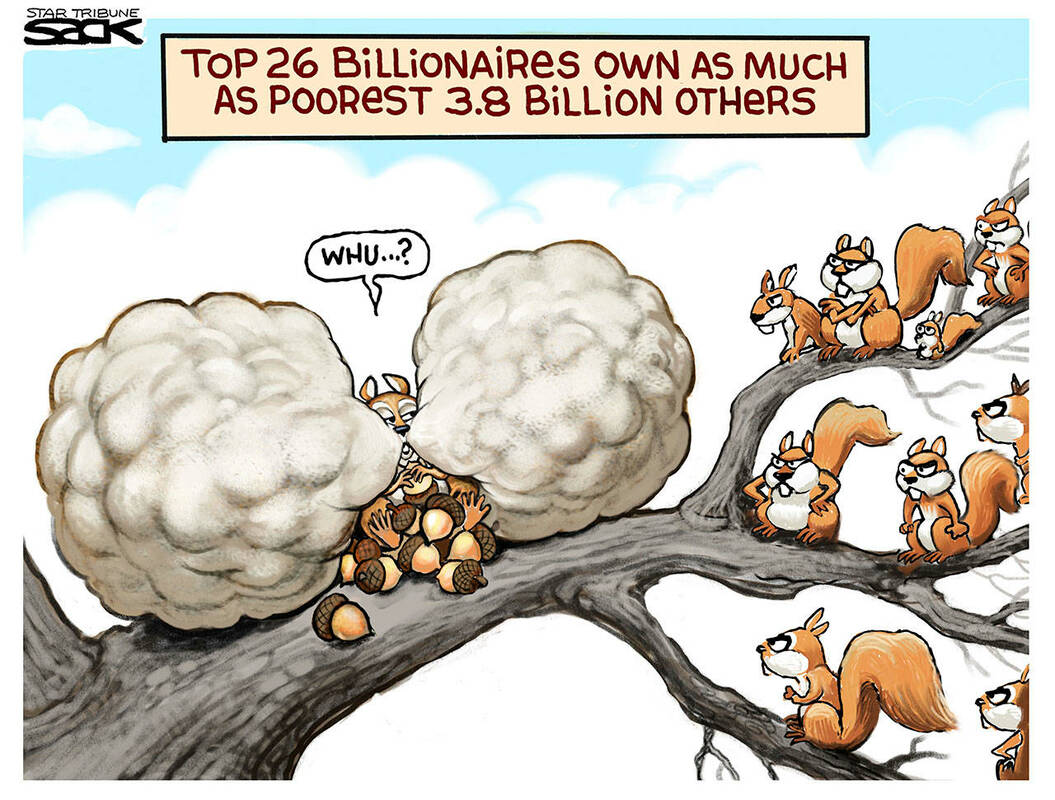

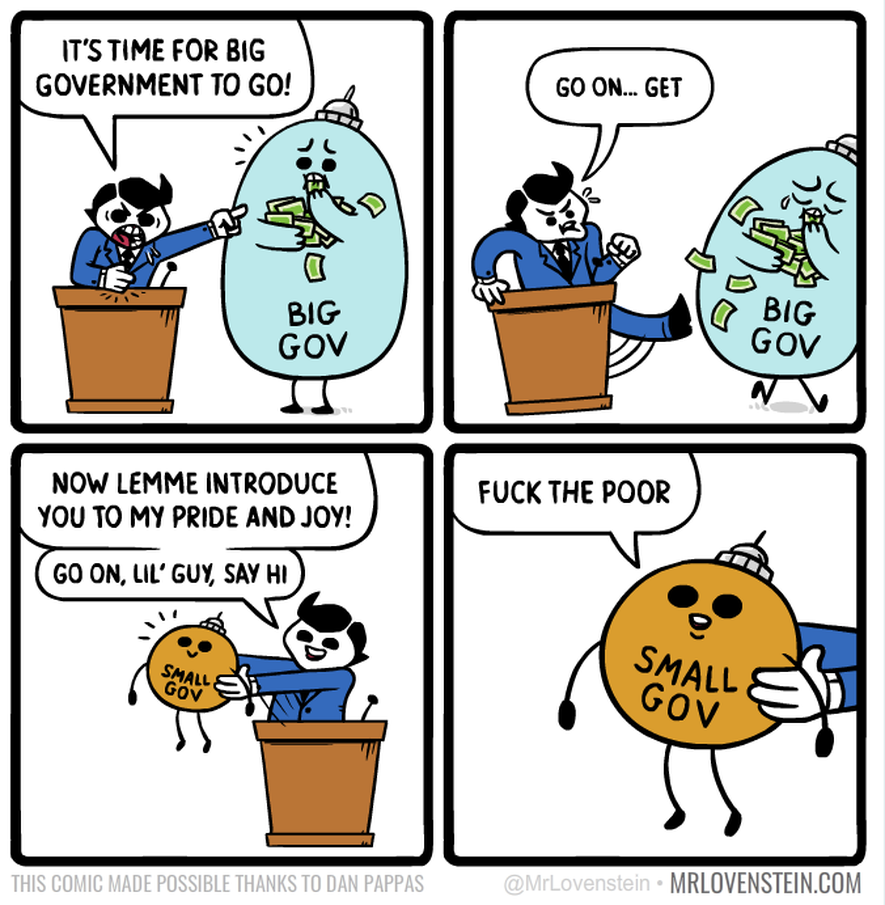

...Why do such a tiny number of people - whether the figure is currently 300 or 500 or 2,000 - control more wealth than the world's poorest 3 billion? Why are a tiny minority of wealthy Americans allowed to exert so much control over U.S. domestic politics, foreign policy, and media while the great masses see a diminution of their real power and standards of living? Why have Americans submitted to levels of surveillance, government intrusion, abuse of civil liberties, and loss of privacy that would have appalled the Founding Fathers and earlier generations? Why does the United States have a lower percentage of unionized workers than any other advanced industrial democracy? Why, in our country, are those who are driven by personal greed and narrow self-interest empowered over those who extol social values like kindness, generosity, compassion, sharing, empathy, and community building? And why has it become so hard for the great majority of Americans to imagine a different, we would say a better, future than the one defined by current policy initiatives and social values....

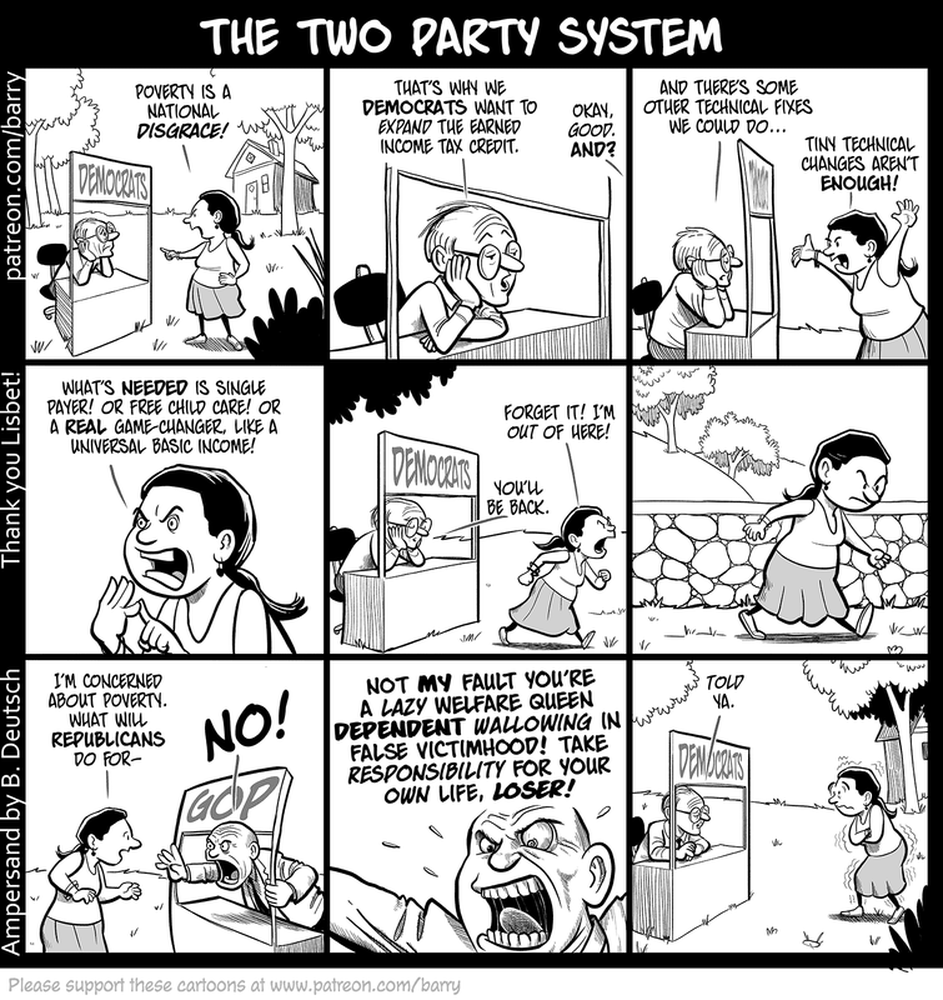

the two-party system

ANCIENT ROME STILL DEFINES US POLITICS OF WAR AND POVERTY

BY JACQUELINE MARCUS, TRUTHOUT

To see that our political system has not evolved from the days of Rome, morally, ethically, legally, environmentally, culturally; that we still live in an age of wars; that our political policies still operate under an archaic system that creates dire poverty and desolation for the masses of people -- a system that exploits resources with no regard for the pollution it creates in order to benefit the few; that this political system has prevailed over the course of 2,000 years or more is quite stunning, indeed, embarrassing, when you think about it.

articles

ROBERT REICH DEBUNKS THE MYTH THAT 'THE RICH DESERVE TO BE RICH'

(ARTICLE BELOW)

SHATTERING DECEPTIVE MIRRORS: YOUNGER GENERATIONS HAVE THE CHANCE TO BUCK THE BEAUTY INDUSTRY SCAM

(ARTICLE BELOW)

BOTTLED WATER CONTAINS HUNDREDS OF THOUSANDS OF PLASTIC BITS: STUDY

(ARTICLE BELOW)

MEDICARE ADVANTAGE PLANS: THE HIDDEN DANGERS AND THREATS TO PATIENT CARE

(ARTICLE BELOW)

A NEW STUDY DESCRIBES IN GROTESQUE DETAIL THE EXTENT TO WHICH THE ULTRARICH HAVE PERVERTED THE CHARITABLE GIVING INDUSTRY.

(ARTICLE BELOW)

HOW TRUMP AND BUSH TAX CUTS FOR BILLIONAIRES BROKE AMERICA

(ARTICLE BELOW)

FROM 1947 TO 2023: RETRACING THE COMPLEX, TRAGIC ISRAELI-PALESTINIAN CONFLICT

(ARTICLE BELOW)

RED STATE CONSERVATIVES ARE DYING THANKS TO THE PEOPLE THEY VOTE FOR

(ARTICLE BELOW)

HOW TEXAS BECAME THE NEW "HOMEBASE" FOR WHITE NATIONALIST AND NEO-NAZI GROUPS

(ARTICLE BELOW)

HOW THE GOP SUCKERED AMERICA ON TAX CUTS

(ARTICLE BELOW)

'MISLEADING': ALARM RAISED ABOUT MEDICARE ADVANTAGE 'SCAM'

(ARTICLE BELOW)

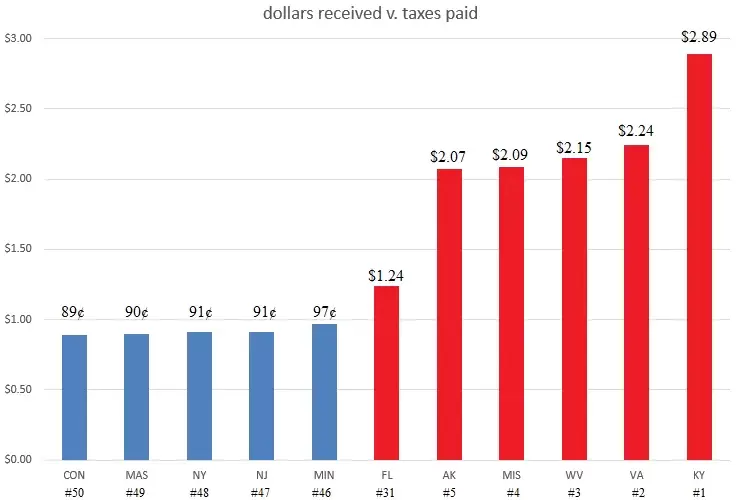

EXCERPT: WHY ARE WE LETTING THE RED STATE WELFARE OLIGARCHS MOOCH OFF BLUE STATES?

(ARTICLE BELOW)

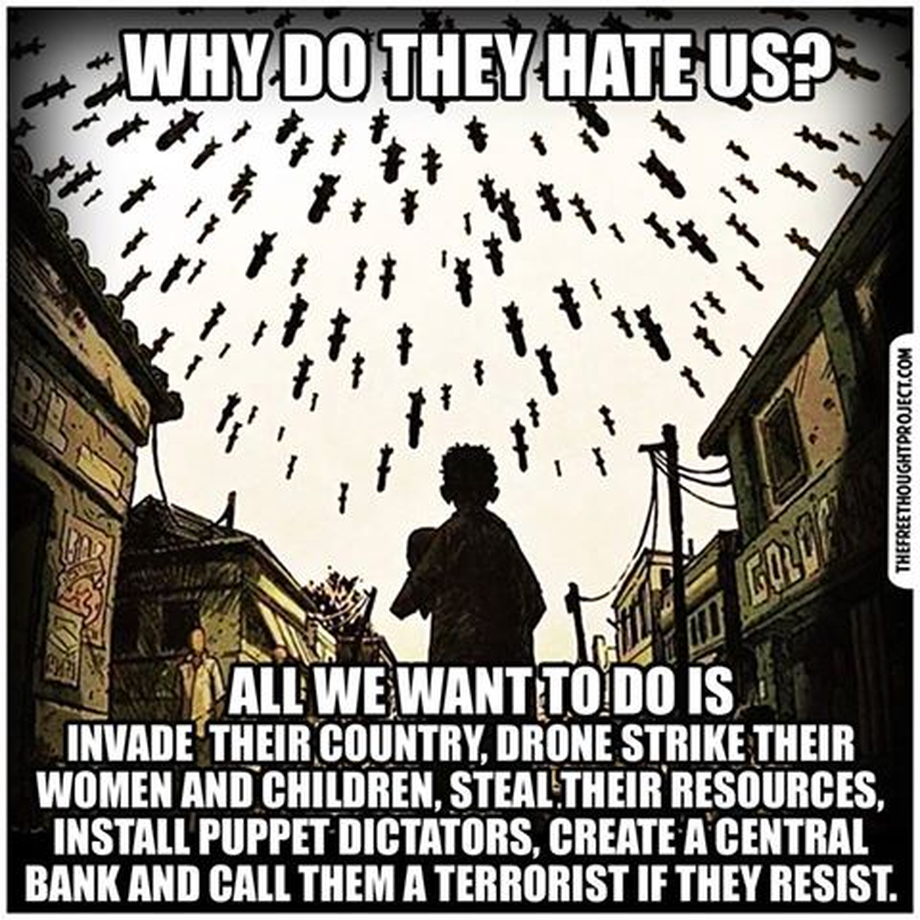

AMERICA'S "SYSTEMIC RACISM" ISN'T JUST DOMESTIC: CONSIDER WHO DIES AROUND THE WORLD IN OUR WARS

(ARTICLE BELOW)

THE DEBT LIMIT IS JUST ONE OF AMERICA’S SIX WORST TRADITIONS

(ARTICLE BELOW)

*REFINED CARBS AND RED MEAT DRIVING GLOBAL RISE IN TYPE 2 DIABETES, STUDY SAYS

(ARTICLE BELOW)

*A NEW STUDY LINKS 45 HEALTH PROBLEMS TO "FREE SUGAR." HERE'S WHAT THAT MEANS, AND HOW TO AVOID IT

(ARTICLE BELOW)

*REPUBLICAN POLICIES ARE KILLING AMERICANS: STUDY

(ARTICLE BELOW)

*THE CORPORATE NARRATIVE ON INFLATION IS BOGUS

(ARTICLE BELOW)

*US FOR-PROFIT HEALTH SYSTEM IS A MASS KILLER

(ARTICLE BELOW)

*REPUBLICAN COUNTIES HAVE HIGHER MORTALITY RATES THAN DEMOCRATIC ONES, STUDY FINDS

(ARTICLE BELOW)

*BOTTLED WATER GIANT BLUETRITON ADMITS CLAIMS OF RECYCLING AND SUSTAINABILITY ARE “PUFFERY”

(ARTICLE BELOW)

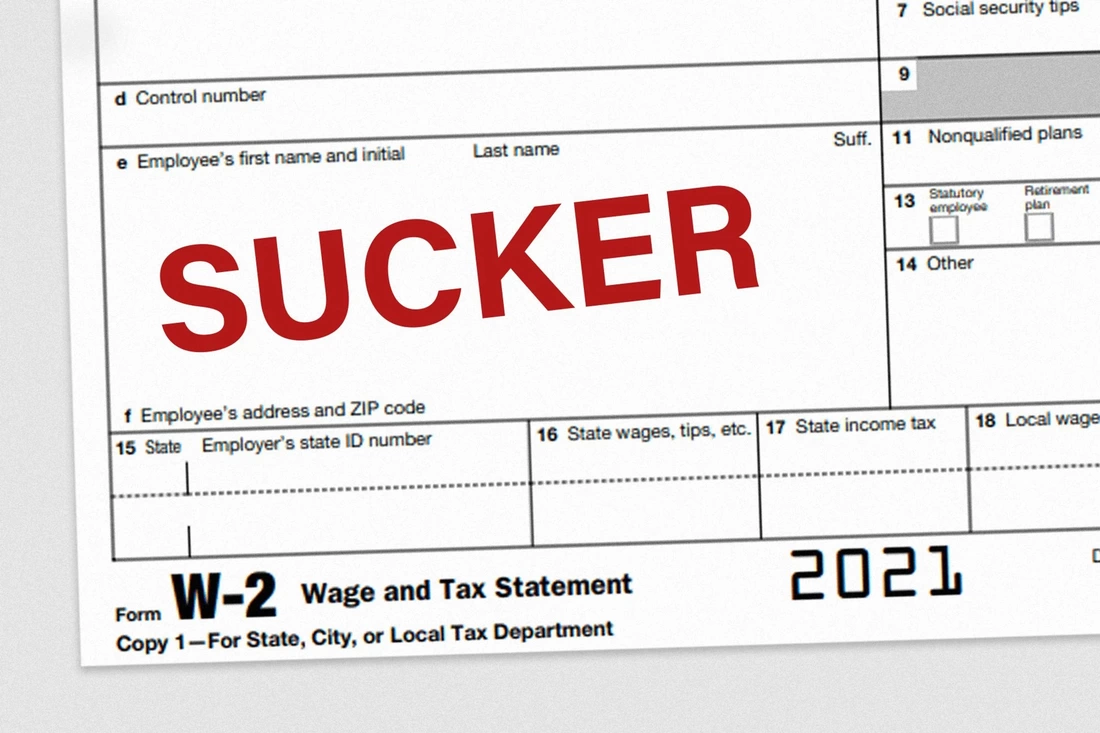

*“IF YOU’RE GETTING A W-2, YOU’RE A SUCKER”

(ARTICLE BELOW)

*POOREST US COUNTIES SUFFERED TWICE THE COVID DEATHS OF THE RICHEST

(ARTICLE BELOW)

*LIFE EXPECTANCY LOWEST IN RED STATES -- AND THE PROBLEM IS GETTING WORSE

(ARTICLE BELOW)

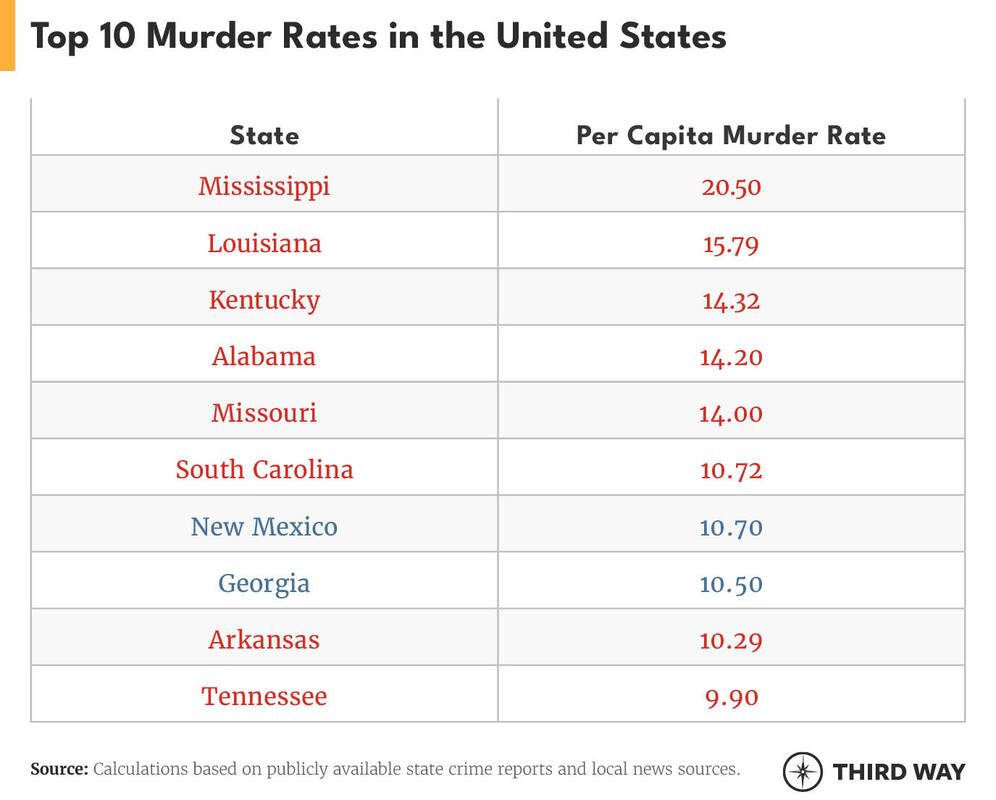

*EXCERPT: THE RED STATE MURDER PROBLEM

(ARTICLE BELOW)

*'CONFLICTED CONGRESS': KEY FINDINGS FROM INSIDER'S FIVE-MONTH INVESTIGATION INTO FEDERAL LAWMAKERS' PERSONAL FINANCES

(ARTICLE BELOW)

*WE COULD VACCINATE THE WORLD 3 TIMES OVER IF THE RICH PAID THE TAXES THEY OWE

(ARTICLE BELOW)

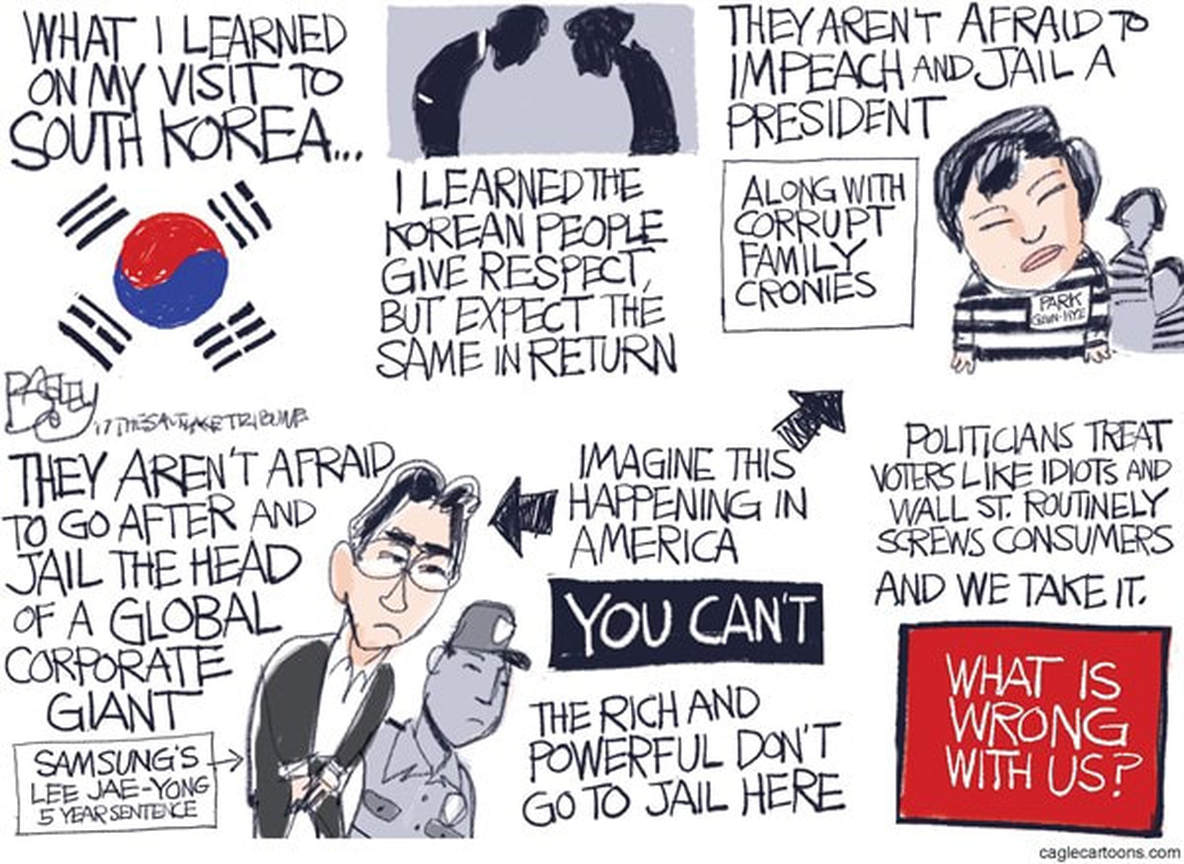

*THE SEEDY CRIMES OF THE OBSCENELY RICH ARE ROUTINELY IGNORED

(ARTICLE BELOW)

*THE 'JOB CREATORS' FANTASY IS A MALIGNANT MYTH THAT RICH USE TO SQUEEZE THE WORKING CLASS

(ARTICLE BELOW)

*THE MURDER OF THE U.S. MIDDLE CLASS BEGAN 40 YEARS AGO THIS WEEK

(ARTICLE BELOW)

*BIPARTISAN INFRASTRUCTURE BILL INCLUDES $25 BILLION IN POTENTIAL NEW SUBSIDIES FOR FOSSIL FUELS

(ARTICLE BELOW)

*THERE'S A STARK RED-BLUE DIVIDE WHEN IT COMES TO STATES' VACCINATION RATES

(ARTICLE BELOW)

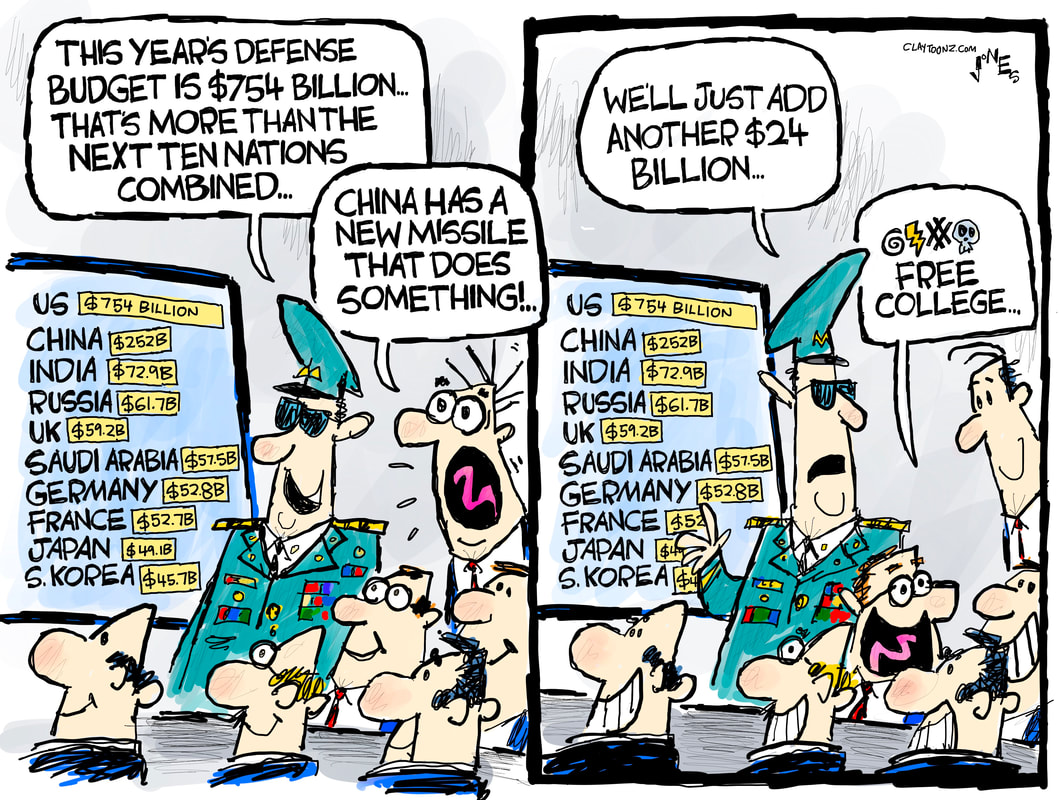

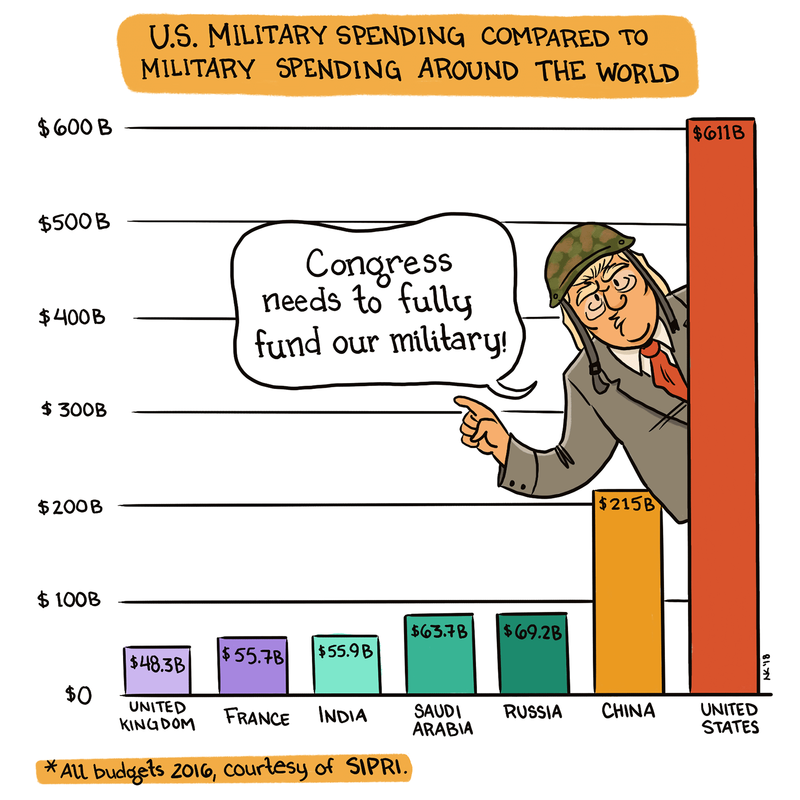

*EVEN BY PENTAGON TERMS, THIS WAS A DUD: THE DISASTROUS SAGA OF THE F-35

(ARTICLE BELOW)

*DON'T WORRY: IF YOU'RE CONCERNED ABOUT RISING FEDERAL DEBT -- READ THIS

(ARTICLE BELOW)

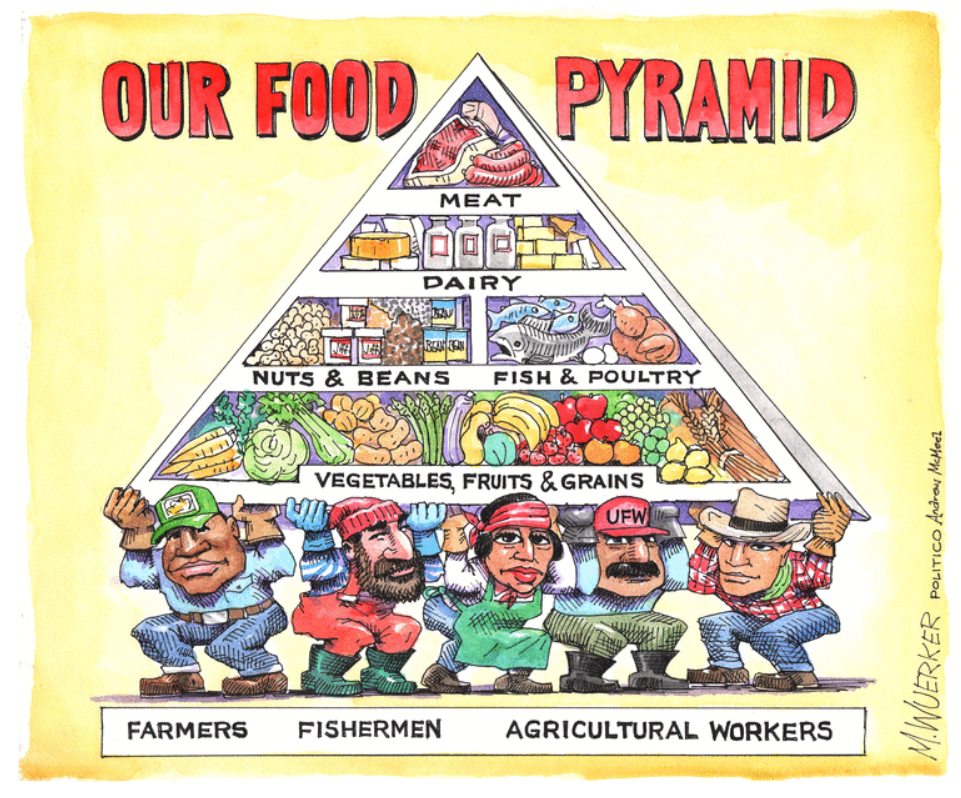

*CORPORATE CONCENTRATION IN THE US FOOD SYSTEM MAKES FOOD MORE EXPENSIVE AND LESS ACCESSIBLE FOR MANY AMERICANS

(ARTICLE BELOW)

*EVEN BIDEN’S $1.9 TRILLION ISN’T NEARLY ENOUGH PANDEMIC RELIEF

(ARTICLE BELOW)

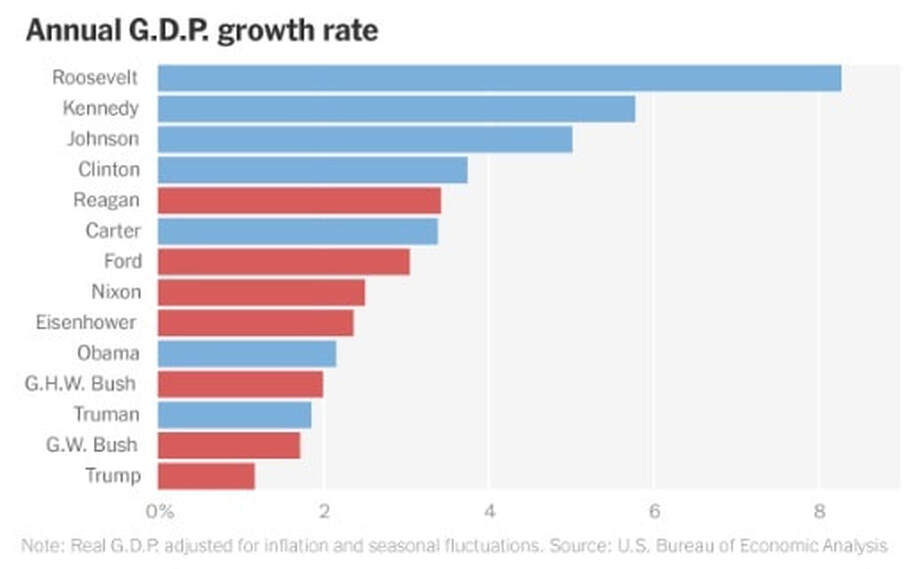

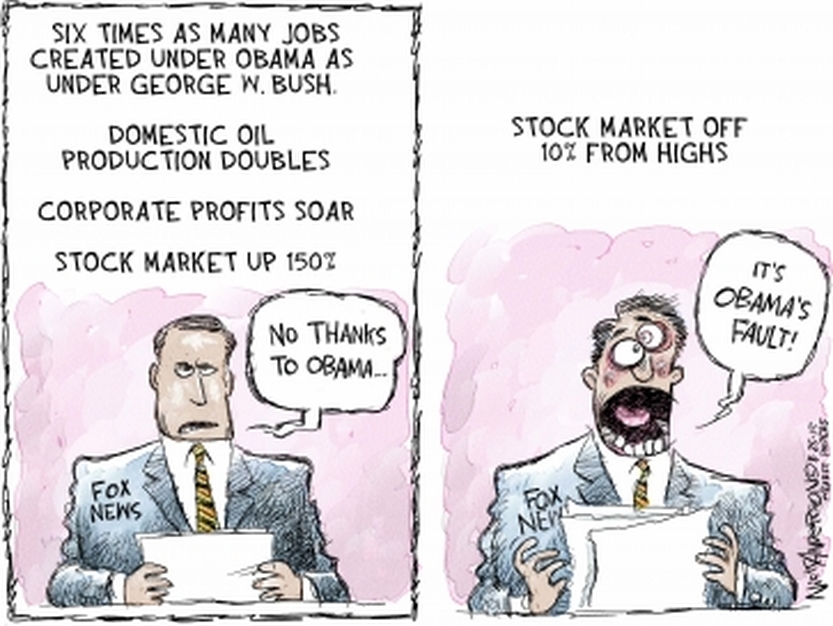

*THE ECONOMY DOES MUCH BETTER UNDER DEMOCRATS. WHY?

G.D.P., JOBS AND OTHER INDICATORS HAVE ALL RISEN MORE SLOWLY UNDER REPUBLICANS FOR NEARLY THE PAST CENTURY.

(ARTICLE BELOW)

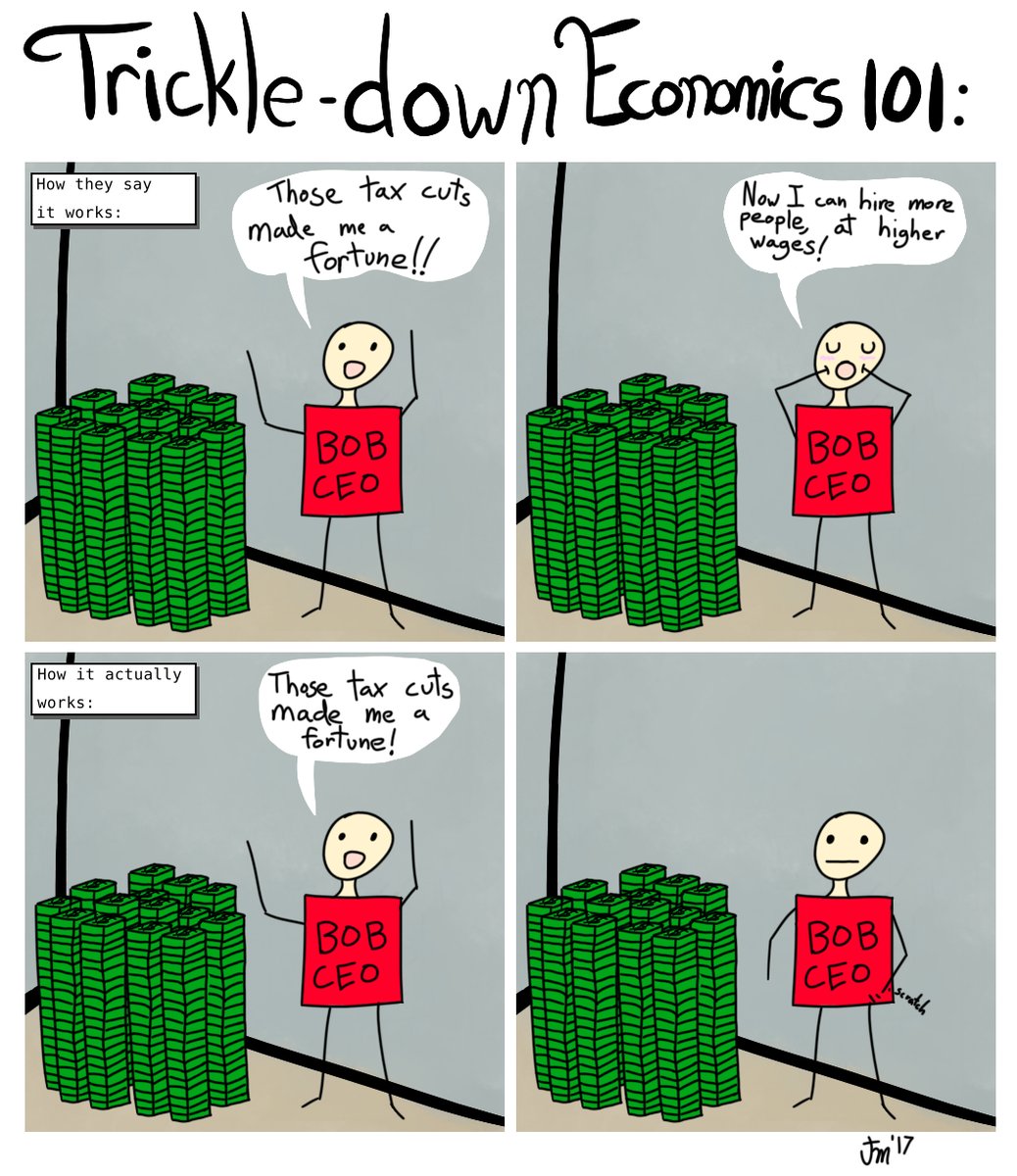

*STUDY OF 50 YEARS OF TAX CUTS FOR RICH CONFIRMS ‘TRICKLE DOWN’ THEORY IS AN ABSOLUTE SHAM

(ARTICLE BELOW)

*TO REVERSE INEQUALITY, WE NEED TO EXPOSE THE MYTH OF THE ‘FREE MARKET’

(ARTICLE BELOW)

*RAPID TESTING IS LESS ACCURATE THAN THE GOVERNMENT WANTS TO ADMIT

(ARTICLE BELOW)

*Trump’s Wildly Exaggerated Help For Black Voters

(ARTICLE BELOW)

*Powerless Farmworkers Suffer Under Trump’s Anti-Migrant Policies

(ARTICLE BELOW)

*Farmers Are Plagued by Debt and Climate Crisis. Trump Has Made Things Worse.

(ARTICLE BELOW)

*THE SUPER-RICH—YOU KNOW, PEOPLE LIKE THE TRUMPS —ARE RAKING IN BILLIONS

(ARTICLE BELOW)

*Retirements, layoffs, labor force flight may leave scars on U.S. economy

(ARTICLE BELOW)

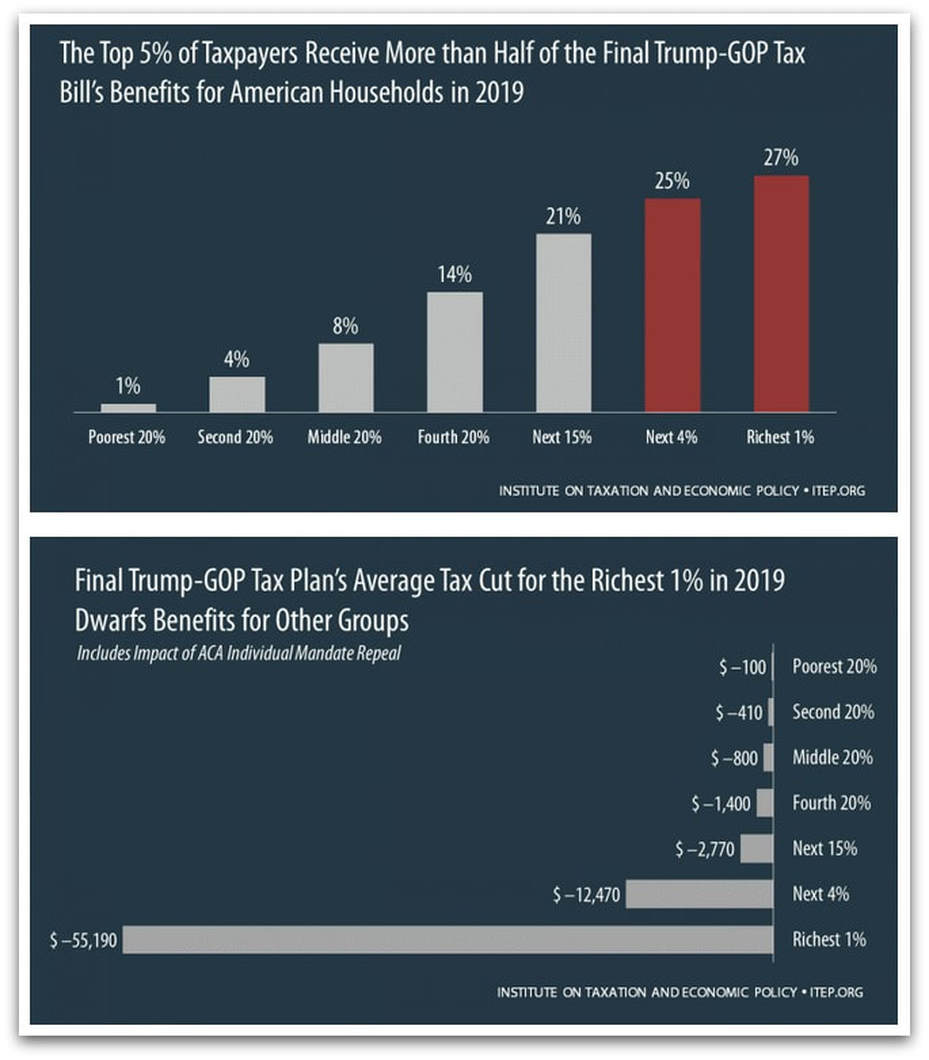

*THE UGLY NUMBERS ARE FINALLY IN ON THE 2017 TRUMP TAX REWRITE

(ARTICLE BELOW)

*THE FED INVESTED PUBLIC MONEY IN FOSSIL FUEL FIRMS DRIVING ENVIRONMENTAL RACISM

(ARTICLE BELOW)

*'WHITE SUPREMACY' WAS BEHIND CHILD SEPARATIONS — AND TRUMP OFFICIALS WENT ALONG, CRITICS SAY

(ARTICLE BELOW)

*N.Y. NURSES SAY USED N95 MASKS ARE BEING RE-PACKED IN BOXES TO LOOK NEW

(ARTICLE BELOW)

*HOW MUCH DOES UNION MEMBERSHIP BENEFIT AMERICA'S WORKERS?

(ARTICLE BELOW)

*American billionaires’ ties to Moscow go back decades

(ARTICLE BELOW)

*‘Eye-popping’ analysis shows top one percent gained $21 trillion in wealth since 1989 while bottom half lost $900 billion

(ARTICLE BELOW)

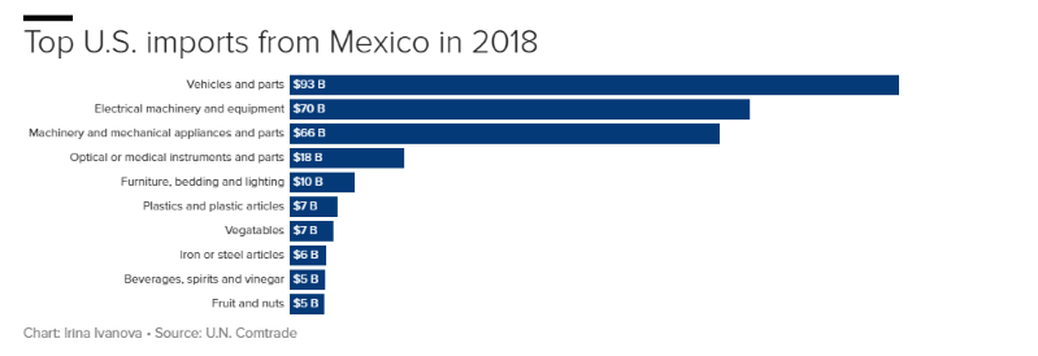

*Here's what the US imports from Mexico

(ARTICLE BELOW)

*AMERICA’S BIGGEST LIE

(ARTICLE BELOW)

*CAPITALISM AND DEMOCRACY: THE STRAIN IS SHOWING

(ARTICLE BELOW)

*ALMOST TWO-THIRDS OF PEOPLE IN THE LABOR FORCE DO NOT HAVE A COLLEGE DEGREE, AND THEIR JOB PROSPECTS ARE DIMMING

(ARTICLE BELOW)

*TOP 10 WAYS THE US IS THE MOST CORRUPT COUNTRY IN THE WORLD

(ARTICLE BELOW)

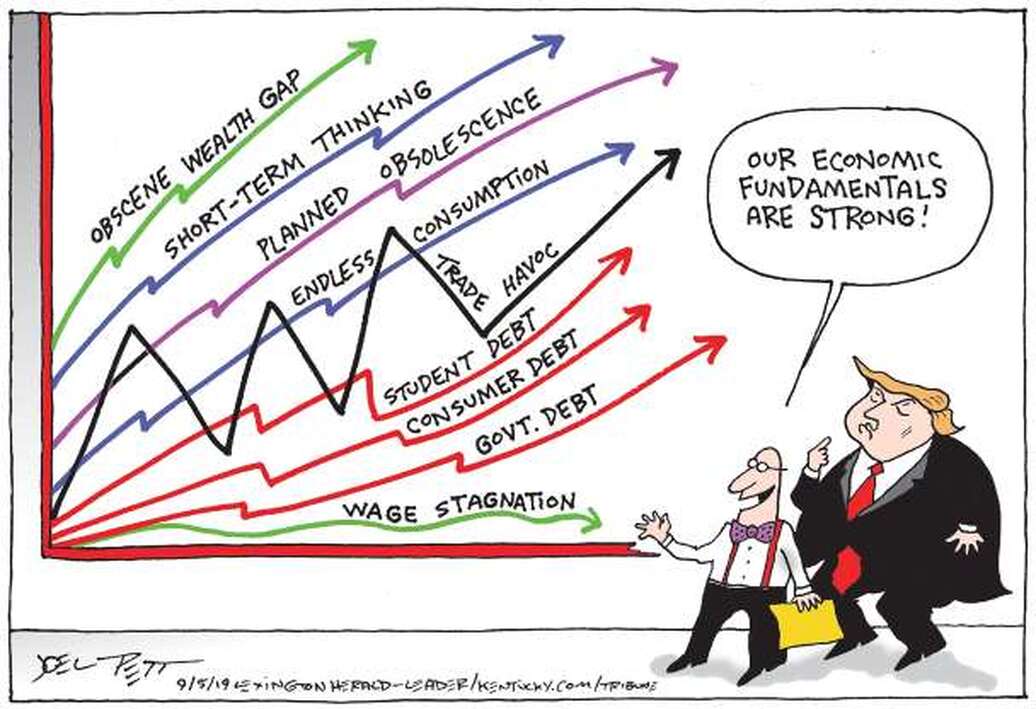

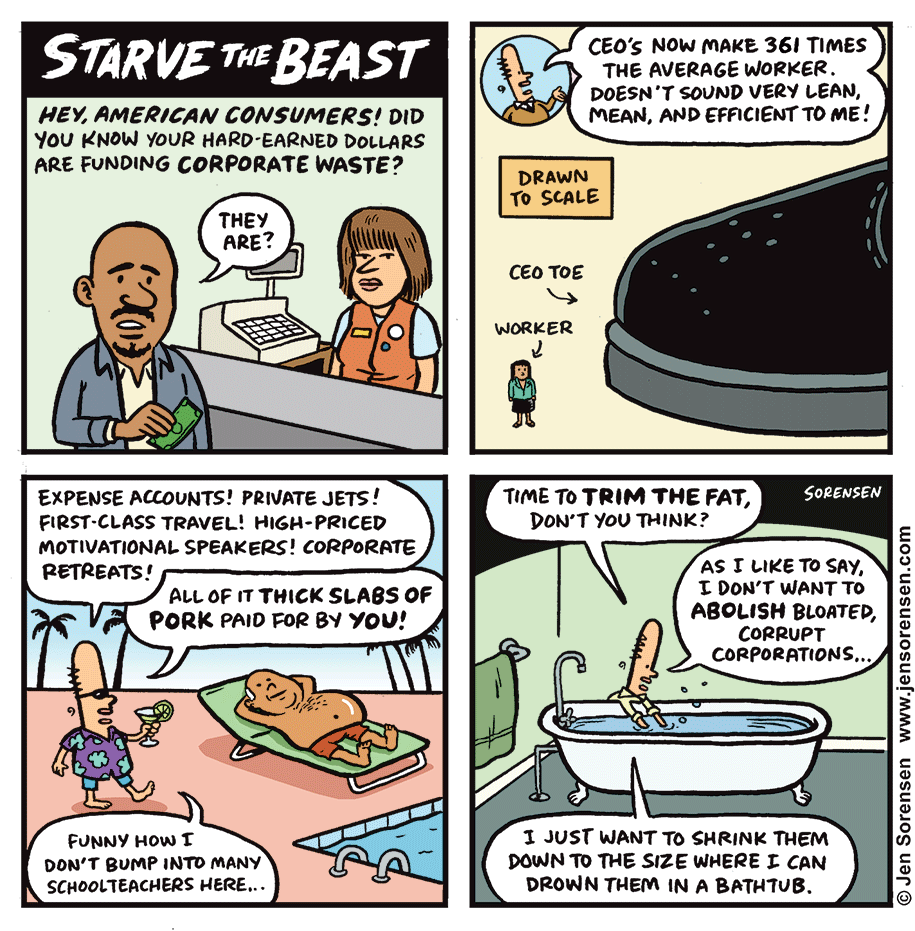

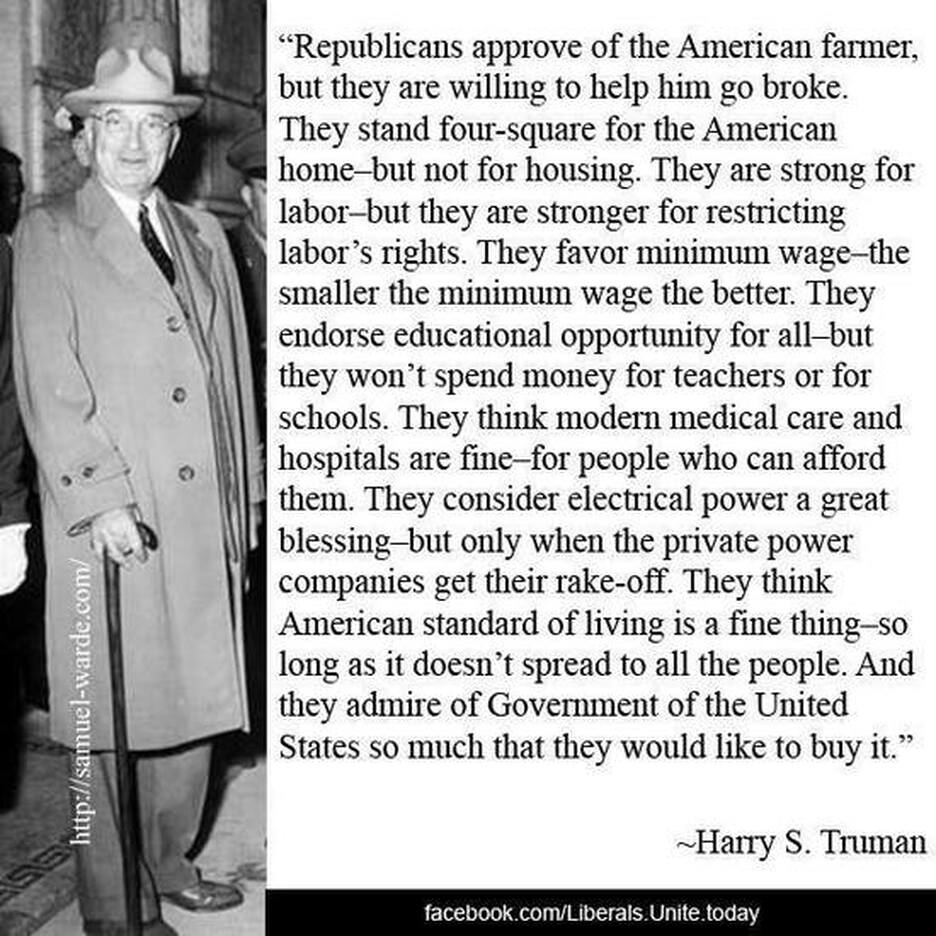

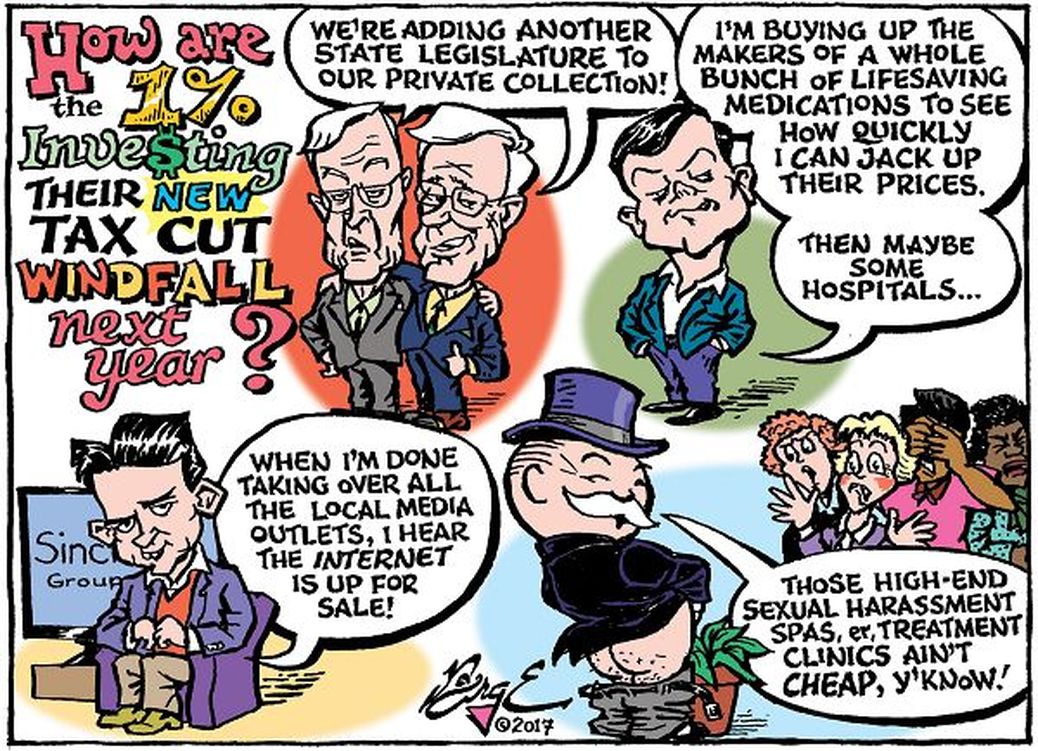

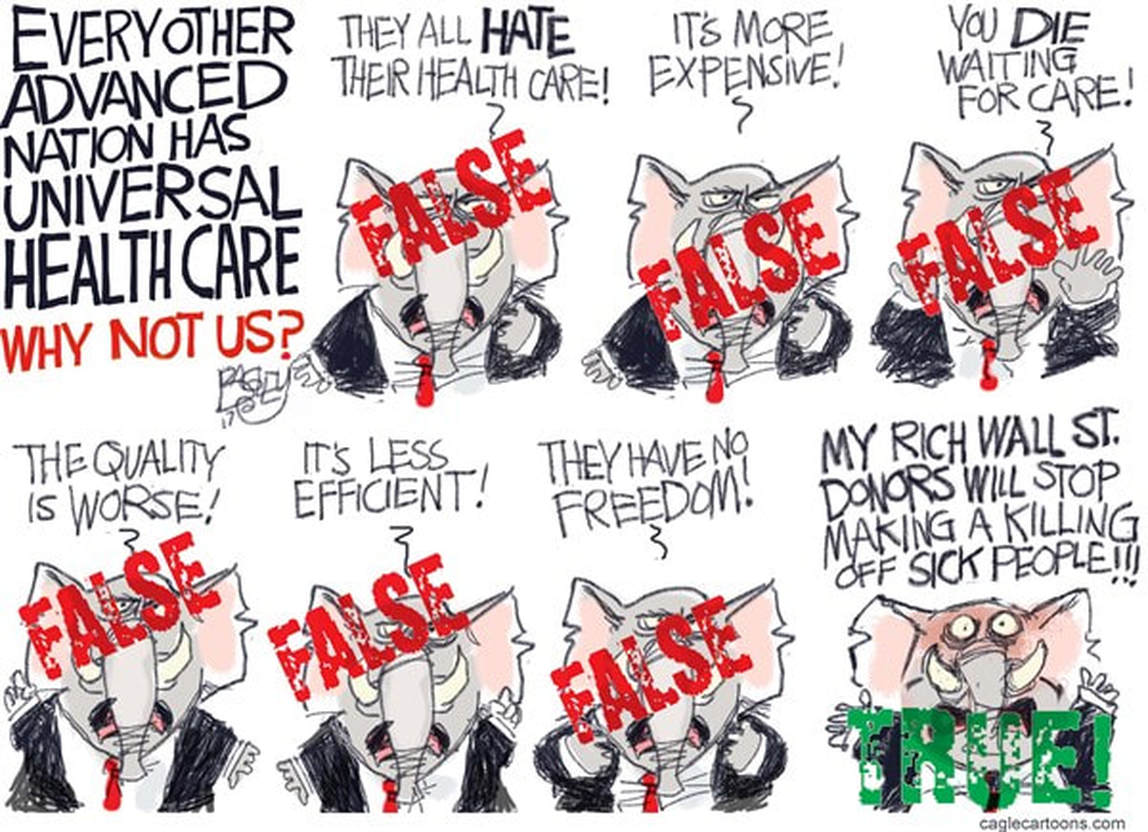

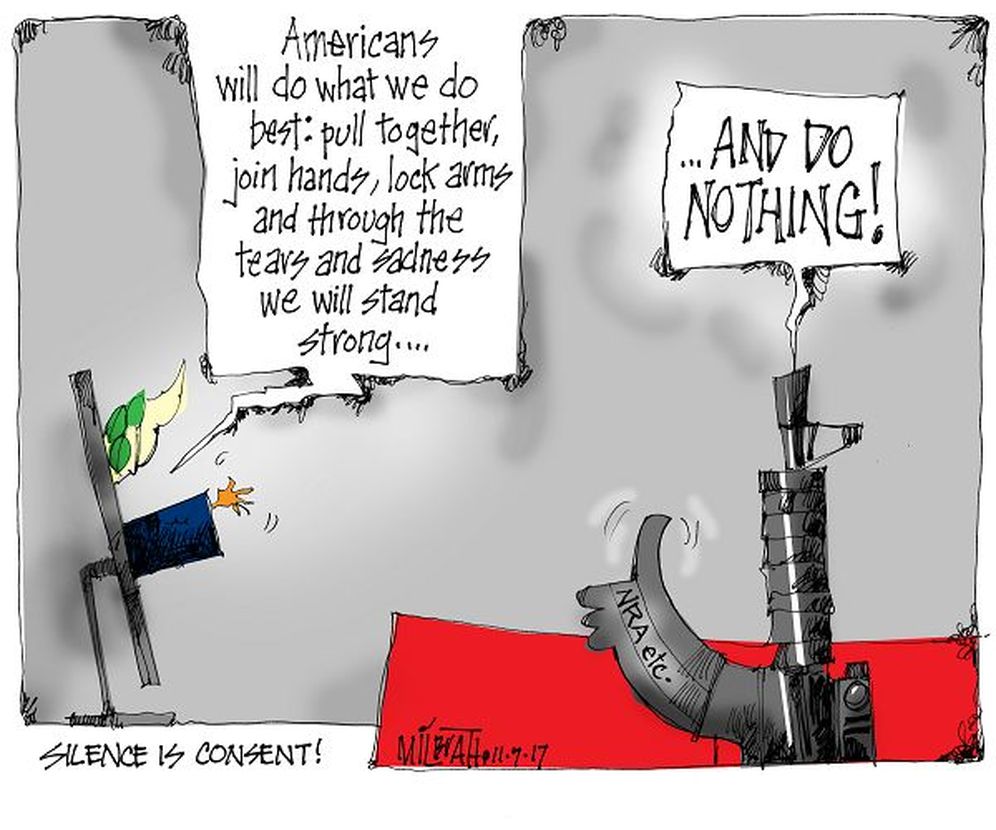

*reality funnies and charts (below)

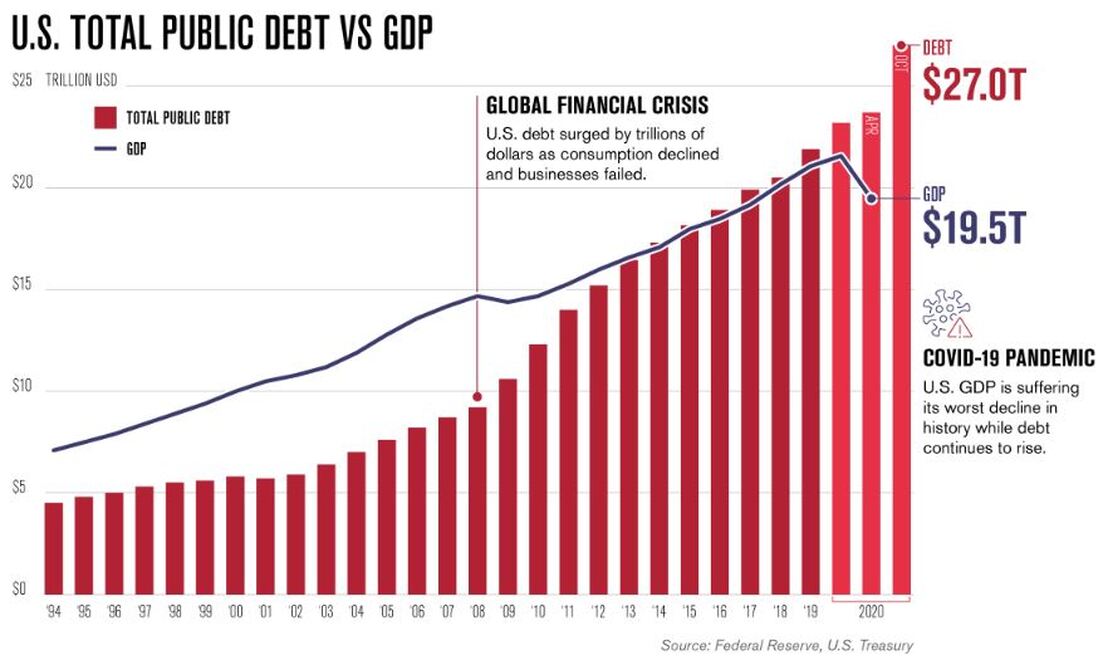

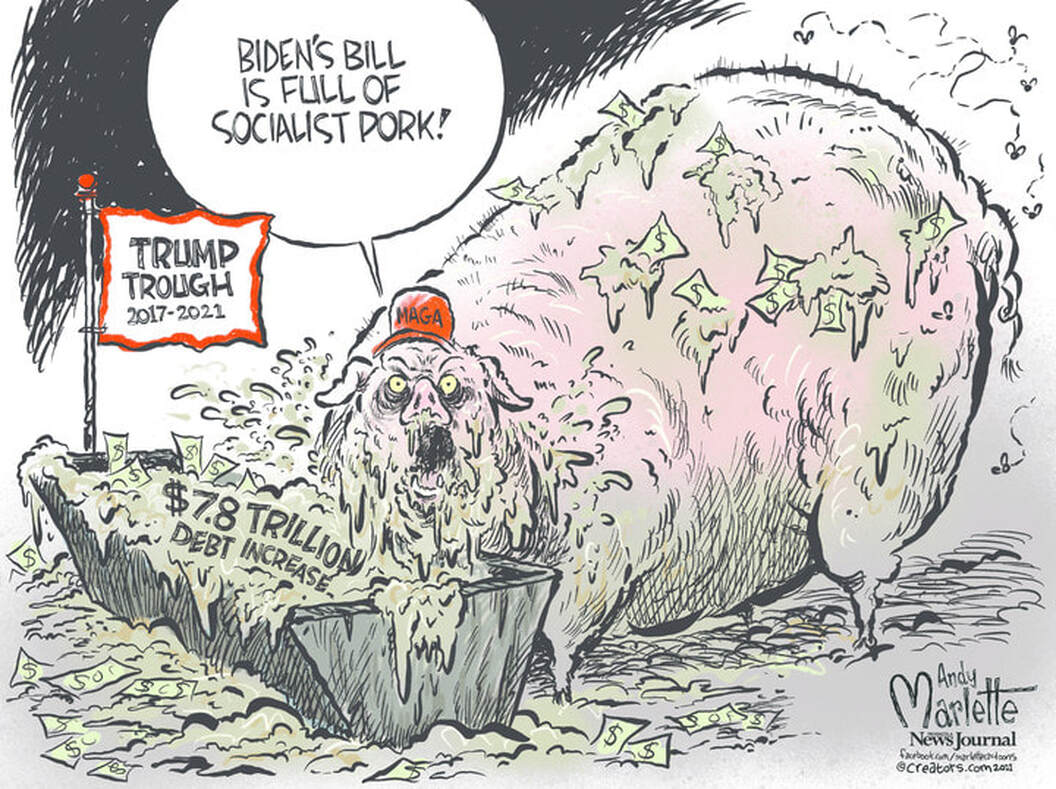

Trump ran up national debt twice as much as Biden: new analysis

Neil Irwin - axios

Former President Trump ran up the national debt by about twice as much as President Biden, according to a new analysis of their fiscal track records.

Why it matters: The winner of November's election faces a gloomy fiscal outlook, with rapidly rising debt levels at a time when interest rates are already high and demographic pressure on retirement programs is rising.

- Both candidates bear a share of the responsibility, as each added trillions to that tally while in office.

- But Trump's contribution was significantly higher, according to the fiscal watchdogs at the Committee for a Responsible Federal Budget, thanks to both tax cuts and spending deals struck in his four years in the White House.

By the numbers: Trump added $8.4 trillion in borrowing over a ten-year window, CRFB finds in a report out this morning.

- Biden's figure clocks in at $4.3 trillion with seven months remaining in his term.

- If you exclude COVID relief spending from the tally, the numbers are $4.8 trillion for Trump and $2.2 trillion for Biden.
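As a rough arithmetic check on the "about twice as much" framing, the short Python sketch below divides the CRFB figures cited in this piece. It uses only the numbers quoted here, in trillions of dollars; the `figures` dictionary and `ratio` helper are illustrative names, and Biden's numbers are a partial-term snapshot taken with seven months remaining, so the ratio is not a like-for-like comparison of full terms.

```python
# CRFB ten-year borrowing estimates quoted in the Axios piece, in trillions of dollars.
# Biden's figures are a snapshot with seven months left in his term.
figures = {
    "total": {"Trump": 8.4, "Biden": 4.3},
    "excluding COVID relief": {"Trump": 4.8, "Biden": 2.2},
}


def ratio(trump: float, biden: float) -> float:
    """How many times larger Trump's figure is than Biden's, rounded to two decimals."""
    return round(trump / biden, 2)


for label, f in figures.items():
    print(f"{label}: Trump/Biden = {ratio(f['Trump'], f['Biden'])}")
```

Run as-is, it prints a ratio of 1.95 for the totals and 2.18 with COVID relief excluded, consistent with the roughly two-to-one gap the report describes.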

State of play: For Trump, the biggest non-COVID drivers of higher public debt were his signature tax cuts enacted in 2017 (causing $1.9 trillion in additional borrowing) and bipartisan spending packages (which added $2.1 trillion).

- For Biden, major non-COVID factors include 2022 and 2023 spending bills ($1.4 trillion), student debt relief ($620 billion), and legislation to support health care for veterans ($520 billion).

- Biden deficits have also swelled, according to CRFB's analysis, due to executive actions that changed the way food stamp benefits are calculated, expanding Medicaid benefits, and other changes that total $548 billion.

Between the lines: Deficit politics may return to the forefront of U.S. policy debates next year.

- Much of Trump's tax law is set to expire at the end of 2025, and the CBO has estimated that fully extending it would increase deficits by $4.6 trillion over the next decade.

- High interest rates make the taxpayer burden of both existing and new debt higher than it was during the era of near-zero interest rates.

- And the Social Security trust fund is rapidly hurtling toward depletion in 2033, which would trigger huge cuts in the retirement benefits absent Congressional action.

What they're saying: "The next president will face huge fiscal challenges," CRFB president Maya MacGuineas tells Axios.

- "Yet both candidates have track records of approving trillions in new borrowing even setting aside the justified borrowing for COVID, and neither has proposed a comprehensive and credible plan to get the debt under control," she said.

- "No president is fully responsible for the fiscal challenges that come along, but they need to use the bully pulpit to set the stage for making some hard choices," MacGuineas said.

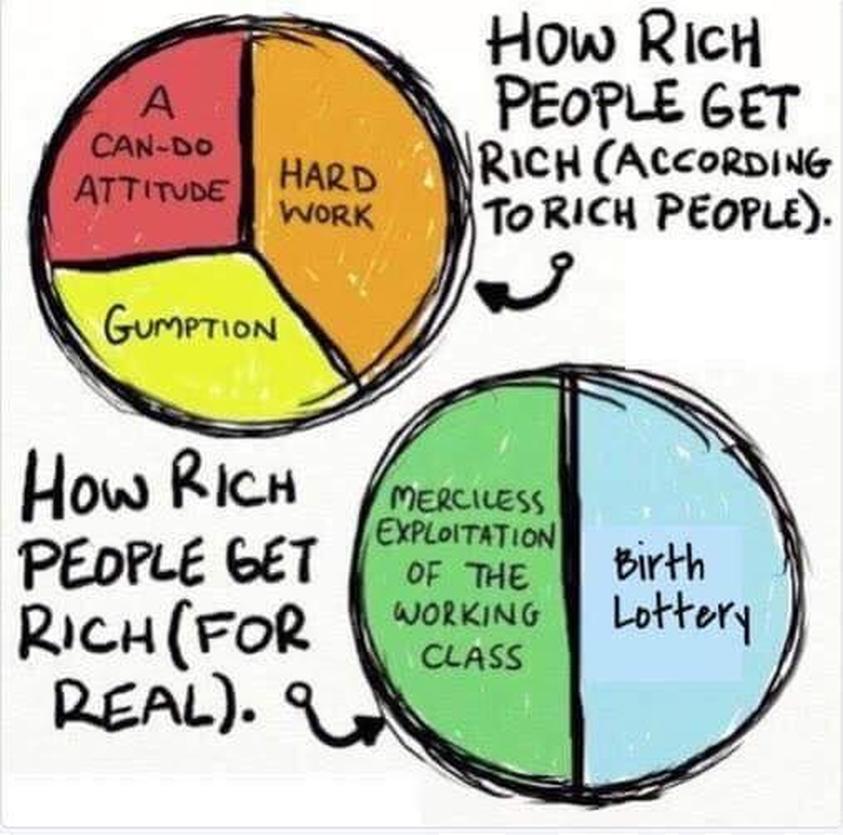

Robert Reich debunks the myth that 'the rich deserve to be rich'

Robert Reich - alternet

June 14, 2024

Don’t be fooled by the myth that people are paid what they’re “worth” — that the rich deserve their ever-increasing incomes and wealth because they’re worth far more to the economy now than years ago (when the incomes and wealth of those at the top were more modest relative to everyone else’s).

The distribution of income and wealth increasingly depends on who has the power to set the rules of the game.

Those at the top are raking in record income and wealth compared to everyone else because:

1. CEOs have linked their pay to the stock market through stock options. They then use corporate stock buybacks to increase stock prices and time the sale of their options to those increases.

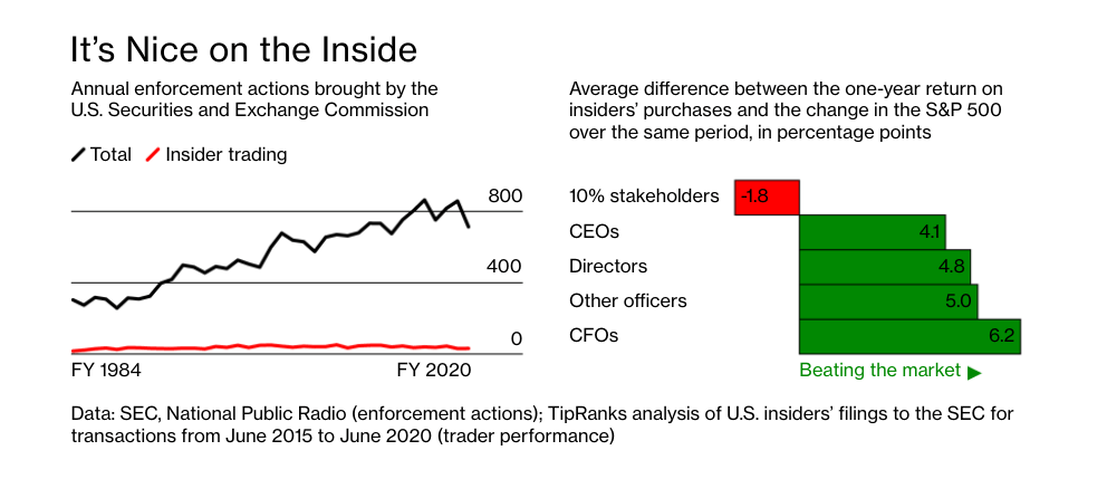

2. They get inside information about corporate profits and losses before the rest of the public and trade on that insider information. This is especially true of hedge fund managers, who specialize in getting insider information before other investors.

3. They create or work for companies that have monopolized their markets. This enables them to charge consumers higher prices than if they had to compete for those consumers. And it lets them keep wages low, because workers have fewer options of whom to work for.

4. They use their political influence to get changes in laws, regulations, and taxes that benefit themselves and their corporations, while harming those without this kind of influence — especially smaller competitors, consumers, and workers.

5. They were born into (or married into) wealth. These days, the most important predictor of someone’s future income and wealth in America is the income and wealth of their parents. Sixty percent of all wealth is inherited. And we’re on the cusp of the biggest intergenerational transfer of wealth in history, from rich boomers to their children.

None of these reasons for the explosion of incomes and wealth at the top has anything to do with worth or merit. They have to do with power — or the power of one’s parents.

Meanwhile, the pay of average workers has stagnated because they have lost economic power and the political influence that goes with it. Corporations have kept a lid on wages by outsourcing work abroad, replacing workers with software, and preventing workers from unionizing.

Bottom line: Wealth doesn’t measure how hard someone has worked or what they deserve. It measures how well our economic system has worked for them.

Shattering deceptive mirrors: Younger generations have the chance to buck the beauty industry scam

The Millennial aunties’ letter to our Gen Z nieces: don’t let cosmetic companies win

By RAE HODGE - salon

Staff Reporter

PUBLISHED JANUARY 22, 2024 5:30AM (EST)

My darling Gen Z girls, it’s your aging Millennial aunties here. The childless, over-educated divorcees in skinny jeans who dyed your hair with Manic Panic, bought your first deck of tarot cards, drove you to the Women’s March and didn’t tell your parents about the pot (so long as you kept your grades up). We’re so proud of you. You’ve shouldered burdens far heavier than we did at your age, have keener convictions and are a hundred times funnier. We love you ferociously. And now that you’re coming of age, as 23% of the world’s population and taking the lead in consumer spending, you’re finally ready to learn the most ancient Millennial art: how to brutally murder a luxury industry.

You’ve been treated with contempt by cosmetics companies. And they’ve gotten away with it too long. Enabled by insidious social media algorithms and inescapable surveillance of data-broker ad-tech, they subject you to billion-dollar psychological manipulation campaigns to keep you scrolling and buying crap you don’t need. The latest trend is “skin care” snake oil. Cosmetics companies have done little more than make so many of you starve, hide and hate yourselves. These companies deserve to die — so let’s kill them.

And who better to show you how than us? You see, the Boomers may not have realized it at the time, but when they plunged us into two Bushes and four recessions they turned Millennials into the apex predators of America’s economic ecosystem. A bit like the 40-year-old vagrant Wolverine from X-Men, we’re the eerily resilient PTSD byproducts of military-industrial experiments, filled with anger issues and toxic metals. We can’t pass down any financial tools (your Gen X grandparents got the last) but we can give you our deadliest financial weapon: The ability to break those who make you broke.

Grooming us for makeup

A 2023 LendingTree survey of 1,950 US consumers found Millennials spend about $2,670 and Gen Z spend about $2,048 annually on beauty products. Mostly, it’s cleansers, toners and serums. Social media influenced 67% of Millennials’ and 64% of Gen Z’ers’ purchases. But you ladies are sharp. About 31% of Gen Z knows online skinfluencers are full of it.

Back in 2017, one marketing whitepaper found 68% of Gen Z girls felt “appearance is a somewhat or very significant source of stress.” Now that burden is laid on Gen Alpha girls, born 2010 and later.

“With the US beauty market reaching an impressive $71.5 billion in 2022, experiencing remarkable 6.1% year-over-year growth, there is immense potential to capitalize on the current inclusivity zeitgeist,” wrote Coresight Research last year, citing a survey of 5,690 teenagers with an average age of 16.

“[We] know from some of our proprietary research, as we enter into the holiday season, that skin care is one of the categories that is at the top of their list,” Ulta Beauty’s chief merchandising officer told CNBC of Gen Alpha.

So do your aunties — and your younger sisters — one favor. Take a look at the skin care products used by tweens in the pictures of that CNBC article. Notice the shapes and colors of the packaging, how they are designed to be held and applied. Look at how the bottles and objects are visually indistinguishable from lipstick, eyeliner, mascara and foundation. It’s plain as day: the kid-targeted skin care cosmetic genre is just training wheels for makeup, disguised as being healthful rather than alluring, in order to avoid alarming your mothers and aunties.

“63% of female teenagers are in Ulta Beauty’s Ultamate Rewards Program,” wrote Coresight Research. “Teens’ core beauty wallet (cosmetics, skincare and fragrance) stands at $313 annually, a 19% year-over-year increase. This increase was driven by a 32% annual increase in spending on cosmetics, up to $123 annually … surpassing skincare spending for the first time since 2020.”

These companies know exactly what they’re doing. They’re grooming girls for makeup by easing them into it with “skin care” snake-oil. And it’s working. It worked on us. It worked on our parents. It worked on you. And now it’s working on your younger sisters.

A machine for self-hatred

We don’t want to preach about social media like hypocrites. But you’ve got to know what you’re up against, and we’d never ask you to stand on anything without receipts. It’s not hard to find peer-reviewed studies confirming links between social media, unhealthy body image and mental health problems in girls. Those problems have spiked since COVID-19 lockdowns pushed more kids online.

In the Irish Journal of Psychological Medicine in 2020, researchers found girls’ body dissatisfaction directly related to time spent on social media. A 2022 study from the University of Delaware found teen girls’ body anxiety connected to other depressive symptoms (with a towering citation list). Studies in Obesity Reviews and Current Psychology found associations between social media exposure, mental health and teen diet in 2023. The same year, a Clinics in Dermatology study found social media can “hinder body dysmorphic disorder patient treatment, leading to excessive use of cosmetic procedures.”

Unsurprisingly, a 2023 review of 21 articles in the Journal of Psychosocial Nursing and Mental Health Services concurred, as did a 2023 review in PLOS Global Public Health.

“Evidence from 50 studies in 17 countries indicates that social media usage leads to body image concerns, eating disorders/disordered eating and poor mental health,” researchers wrote.

Finally, University of Western Australia researchers said in 2022:

“Adolescent girls appear more vulnerable to experiencing mental health difficulties from social media use than boys ... Sexual objectification through images may reinforce to adolescent girls that their value is based on their appearance.”

Are we saying abandon all social media? Not at all. We know you often have to be there. We do too. But caveat emptor, as they say — or “buyer beware” for those of you whose schools slashed Latin studies. Online platforms are Rube Goldberg machines for self-hatred. They pimp our attention spans to companies paying for ads — no matter how it harms our mental health — and then train us to perform for perpetual surveillance. Never underestimate their greed, never forget they conspire with the enemy.

Enough is enough. Makeup for fun and artistry’s sake is one thing, but we’ve lost too much money and self-esteem to digital con-artists who call us ugly. Your murderous Millennial aunties are with you. Now, let’s rip this industry apart and use its blood for lipstick.

Bottled water contains hundreds of thousands of plastic bits: study

Agence France-Presse - raw story

January 9, 2024 6:53AM ET

Bottled water is up to a hundred times worse than previously thought when it comes to the number of tiny plastic bits it contains, a new study in the Proceedings of the National Academy of Sciences said Monday.

Using a recently invented technique, scientists counted on average 240,000 detectable fragments of plastic per liter of water in popular brands -- between 10 and 100 times higher than prior estimates -- raising potential health concerns that require further study.

"If people are concerned about nanoplastics in bottled water, it's reasonable to consider alternatives like tap water," Beizhan Yan, an associate research professor of geochemistry at Columbia University and a co-author of the paper told AFP.

But he added: "We do not advise against drinking bottled water when necessary, as the risk of dehydration can outweigh the potential impacts of nanoplastics exposure."

There has been rising global attention in recent years on microplastics, which break off from bigger sources of plastic and are now found everywhere from the polar ice caps to mountain peaks, rippling through ecosystems and finding their way into drinking water and food.

While microplastics are anything under 5 millimeters, nanoplastics are defined as particles below 1 micrometer, or a millionth of a meter -- so small they can pass through the digestive system and lungs, entering the bloodstream directly and from there to organs, including the brain and heart. They can also cross the placenta into the bodies of unborn babies.

There is limited research on their impacts on ecosystems and human health, though some early lab studies have linked them to toxic effects, including reproductive abnormalities and gastric issues.

To study nanoparticles in bottled water, the team used a technique called Stimulated Raman Scattering (SRS) microscopy, which was recently invented by one of the paper's co-authors, and works by probing samples with two lasers tuned to make specific molecules resonate, revealing what they are to a computer algorithm.

They tested three leading brands but chose not to name them, "because we believe all bottled water contain nanoplastics, so singling out three popular brands could be considered unfair," said Yan.

The results showed between 110,000 and 370,000 particles per liter, 90 percent of which were nanoplastics while the rest were microplastics.

The most common type was nylon -- which probably comes from plastic filters used to purify the water -- followed by polyethylene terephthalate or PET, which is what bottles are themselves made from, and leaches out when the bottle is squeezed. Other types of plastic enter the water when the cap is opened and closed.

Next, the team hopes to probe tap water, which has also been found to contain microplastics, though at far lower levels.

Medicare Advantage Plans: The Hidden Dangers and Threats to Patient Care

Medicare Advantage Plans Prioritize Profits at the Expense of Patient Well-being

JOE MANISCALCO - dc report

12/11/2023

The Medicare open enrollment period — that special time of year when the purveyors of profit-driven Medicare Advantage health insurance plans target retirees for the hard sell — ended this week. And for the first time ever, more than half of all Medicare-eligible recipients nationwide have swallowed the bait and signed up. And what exactly is so wrong with that?

Well, not a thing, opponents charge. Unless, of course, you get sick and prefer not to have some corporate bean counter determine whether or not you can get the test or begin the treatment your doctor prescribes.

It could also be a big problem if you happen to like your doctor and the specialist they recommend, only to learn later that they are not “in network,” or that they have decided to drop Medicare Advantage altogether because they simply cannot tolerate all the built-in frustration and bureaucratic hassle.

Private health insurance companies, in fact, are great at touting the lower out-of-pocket costs and SilverSneakers perks associated with profit-driven Medicare Advantage plans. But they are loath to talk about the narrowed pool of physicians Medicare Advantage recipients can actually see, and the prior authorizations erected between Medicare Advantage recipients and the care they need, all in the never-ending pursuit of profit.

Across the country, an increasing number of physicians are reporting that the prior authorizations that come with profit-driven Medicare Advantage plans have, indeed, become a “nightmare” for both themselves and the patients they are attempting to treat.

Just last month, the 125-year-old American Hospital Association (AHA) loudly decried and sought redress from the “inappropriate denials of medically necessary care” that come with Medicare Advantage plans.

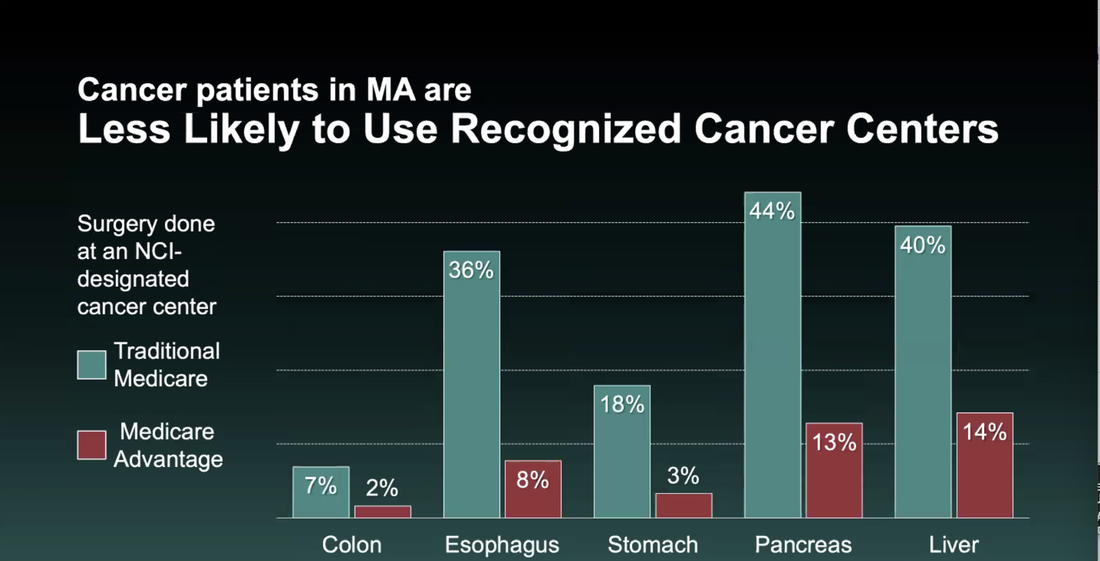

More frighteningly still, Physicians for a National Health Program (PNHP) points to data showing Medicare Advantage patients are being forced to wait longer for cancer surgery than those using authentic or “traditional” Medicare.

“And on top of having to wait longer for cancer surgery than patients with traditional Medicare,” Dr. Claudia Fegan, chief medical officer at Cook County Health in Illinois, says, “cancer patients in Medicare Advantage plans are far less likely to use a National Cancer Institute–designated Center of Excellence.”

That means cancer patients enrolled in profit-driven Medicare Advantage plans are more likely to have their surgeries done at hospitals far less experienced in performing complex oncological procedures.

“It turns out,” Dr. Fegan says, “these delays in getting treatment and the redirection to the less experienced hospitals, is literally killing patients.”

Astonishingly, it gets worse, Medicare Advantage foes insist.

Medicare Advantage purveyors including UnitedHealthcare and Cigna are currently facing class-action lawsuits for allegedly using AI (artificial intelligence) to further deny Medicare Advantage recipients vital, necessary care, again, all in the name of profit.

The ongoing drive to funnel Medicare-eligible retirees into privatized health insurance plans has sparked a nationwide revolt, however.

Municipal retirees from coast to coast who were promised traditional Medicare benefits when they initially signed up for civil service are leading the fight against privatization and refusing to allow Medicare-eligible recipients to be herded into profit-driven Medicare Advantage plans.

And they are winning.

This past summer, a New York State Supreme Court justice permanently blocked the City of New York and embattled Mayor Eric Adams from stripping municipal retirees of their traditional Medicare benefits and pushing them into a profit-driven Medicare Advantage plan administered by insurance giant Aetna.

Public service retirees in Delaware have also succeeded in blocking efforts in their state to push them into a privatized Medicare Advantage plan.

A stay order blocking Governor John Carney’s administration from herding public service retirees into Medicare Advantage was issued in Delaware Superior Court on October 19, 2022, and remains in effect despite ongoing efforts to have it overturned.

Municipal retirees from New York, Delaware, Seattle and other places around the country, in fact, are in the process of organizing into a potent united front against the Medicare Advantage push they say not only harms recipients, but threatens the survival of traditional Medicare itself.

Medicare Advantage foes were celebrated as national heroes during a “Save Medicare” rally held in front of the U.S. Capitol steps on July 27.

In September, U.S. Representatives Ritchie Torres (NY-15) and Nicole Malliotakis (NY-11) introduced bipartisan legislation aimed at stopping employers nationwide from pushing retirees into profit-driven Medicare Advantage programs.

In addition to threatening the health of elderly recipients and undermining traditional Medicare, opponents of privatization have long maintained that organized labor’s sloppy embrace of Medicare Advantage will ultimately prove disastrous for an already beleaguered labor movement.

Why would anyone want to join a union, retired civil servants ask, if after a lifetime of paying dues and advancing union solidarity, union leadership can simply “sell off everything that they have” in retirement?

“That is a travesty, and that will cause the end of the Labor Movement,” Marianne Pizzitola, president of the New York City Organization of Public Service Retirees, said in June.

Veteran single-payer advocate Kay Tillow insists union leaders scrambling to find a solution to soaring healthcare costs by acquiescing to privatization and the Medicare Advantage push are making a huge mistake.

“They’ve chosen [a solution] that takes us backward and will hurt their future retirees as well — and it’s going to destroy Medicare in the meantime,” Tillow said.

Billionaire Philanthropy Is a Scam

A new study describes in grotesque detail the extent to which the ultrarich have perverted the charitable giving industry.

Jason Linkins

the new republic

November 18, 2023/3:00 a.m. ET

Money gets a bad rap in some quarters. It’s said that it “isn’t everything,” that it cannot “buy you happiness,” that loving it is “the root of all evil.” But if there’s one thing that money is absolutely stupendous at doing, it’s solving problems. Naturally, the more money you have, the more problems you can solve. Which is why the fact that we’ve allowed a large portion of an otherwise finite amount of wealth to become concentrated in the hands of an increasing number of billionaire plutocrats is something of a crisis: Since they have all the money, they call the shots on what problems get solved. And the main problem they want to solve is the public relations problem that’s arisen from their terrible ideas.

Naturally, the ultrarich put on a big show of generosity to temper your resolve to claw back their fortunes. Everywhere you look, their philanthropic endeavors thrive: They’re underwriting new academic buildings at the local university, providing the means by which your midsize city can have an orchestra, and furnishing the local hospital with state-of-the-art equipment. And a sizable number of these deep-pocketed providers have banded together to create “The Giving Pledge,” a promise to give away half of their wealth during their lifetimes. It all sounds so pretty! But as a new report from the Institute for Policy Studies finds, these pledgers aren’t following through on their commitments—and the often self-serving nature of their philanthropy is actually making things worse for charitable organizations.

As the IPS notes, the business of being a billionaire—which suffered nary a hiccup during the pandemic—is booming. So one of the challenges that the Giving Pledgers face is that the rate at which they accrue wealth is making their promise harder to fulfill. The 73 pledgers “who were billionaires in 2010 saw their wealth grow by 138 percent, or 224 percent when adjusted for inflation, through 2022,” with combined assets ballooning from $348 billion to $828 billion.

According to the report, those who are making the effort to give aren’t handing their ducats over to normal charities. Instead, they are increasingly putting their money into intermediaries, such as private foundations or Donor Advised Funds, or DAFs. As the IPS notes, donations to “working charities appear to be declining” as foundations and DAFs become the preferred warehouses for philanthropic funds. (As TNR reported recently, DAFs are a favorite vehicle for anonymous donors to fund hate groups—while also pocketing a nice tax break.) This also has spurred some self-serving innovations among the philanthropic class, “such as taking out loans from their foundations or paying themselves hefty trustee salaries.” More and more of the pledgers are conflating their for-profit investments with their philanthropy as well. And wherever large pools of money are allowed to accrue, outsize political influence follows.

The shell games played by billionaire philanthropists are nothing new. The most common of these is the two-step process by which the ultrarich make charitable donations to solve a problem that their for-profit work caused in the first place. It’s nice that the Massachusetts Institute of Technology’s Institute for Integrative Cancer Research exists, but it’s soured somewhat knowing that the $100 million gift David H. Koch seeded it with was born from a profitable enterprise that included the carcinogens sold by Koch subsidiary Georgia-Pacific. In similar fashion, Mark Zuckerberg’s Chan Zuckerberg Initiative “handed out over $3m in grants to aid the housing crisis in the Silicon Valley area,” a problem that, Guardian contributors Carl Rhodes and Peter Bloom note, Zuckerberg had no small part in causing in the first place.

And at the top of the plutocratic food chain, a billionaire’s charitable enterprise can become a philanthropic Death Star. This week, The Baffler’s Tim Schwab took a deep dive into the Bill and Melinda Gates Foundation and discovered that the entity essentially exists as a public relations stunt to justify Gates’s own staggering wealth. One noteworthy highlight involved Gates reaching out to his upper-crust lessers during the Covid pandemic, seeking additional money on top of the foundation’s own commitment, creating a revenue stream that could tie an ethicist into a knot. “During a global pandemic, when billions of people were having trouble with day-to-day expenses even in wealthy nations,” Schwab asks, “why would an obscenely wealthy private foundation start competing for charitable donations against food banks and emergency housing funds?”

As the IPS study notes, perhaps the worst aspect of all of this is that ordinary taxpayers essentially subsidize these endeavors: According to their report, “$73.34 billion in tax revenue was lost to the public in 2022 due to personal and corporate charitable deductions,” a number that goes up to $111 billion once you include what “little data we have about charitable bequests and the investments of charities themselves,” and balloons to several hundred billion dollars each year “if we also include the capital gains revenue lost from the donation of appreciated assets.”

The IPS offers a number of ideas for reforming the world of billionaire philanthropy to better serve the public interest. There are changes to the current regime of private foundations and Donor Advised Funds that would ensure that money flows to worthy recipients with greater speed and transparency. Regulations could ensure that such organizations aren’t just another means by which billionaires shower favors on board members—and that would give foundation board members greater independence to act on their own ideas and prevent the organization from being used as one rich person’s influence-peddling machine. But for my money, the one way we could solve this problem is to institute one of the most popular policy positions in the history of the United States, and tax the rich to the hilt.

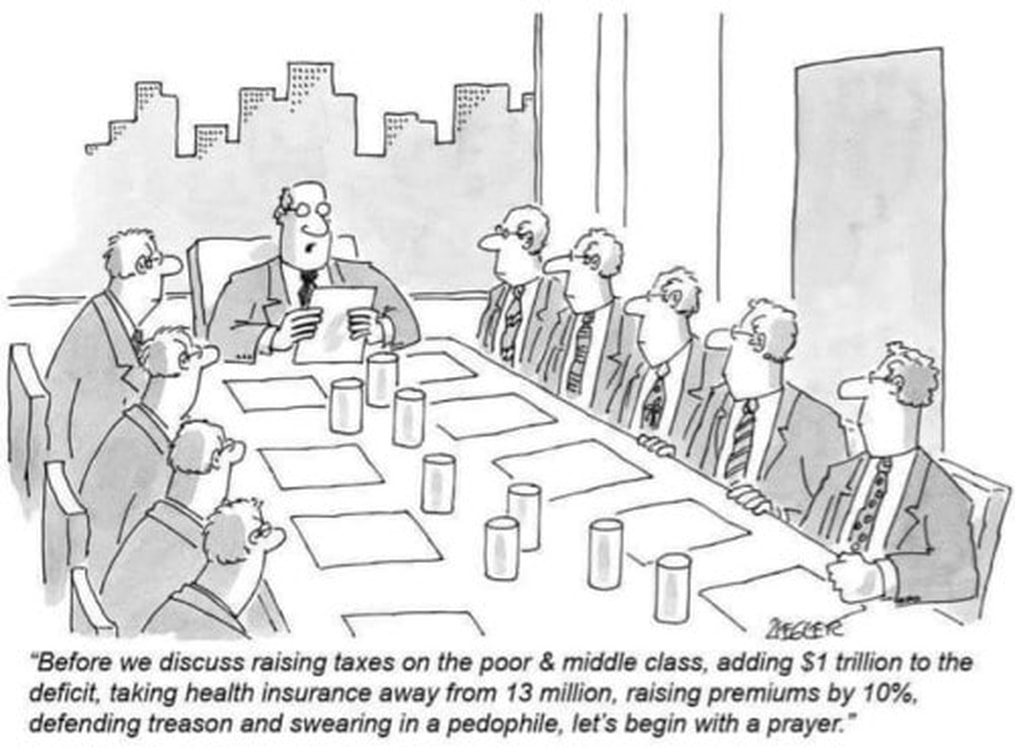

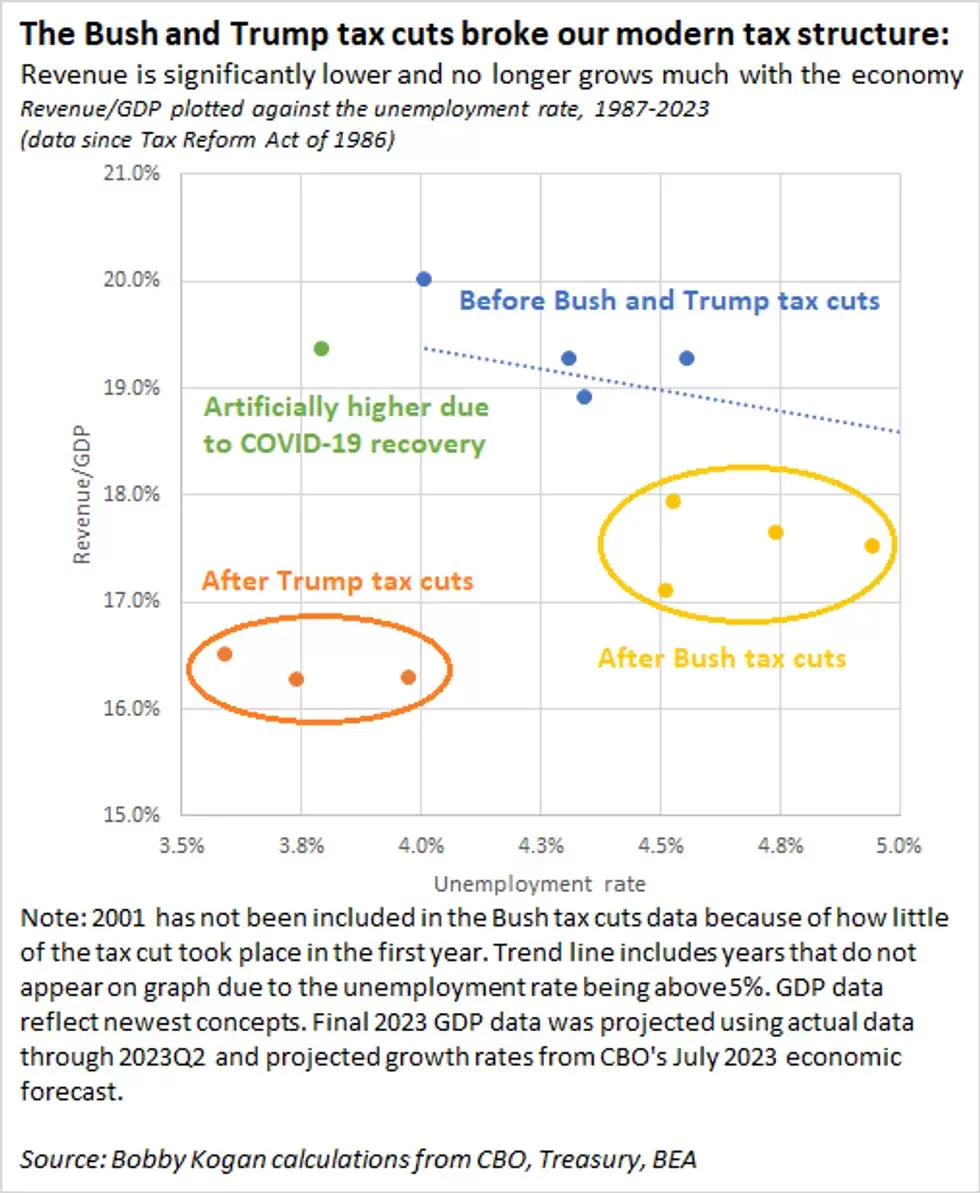

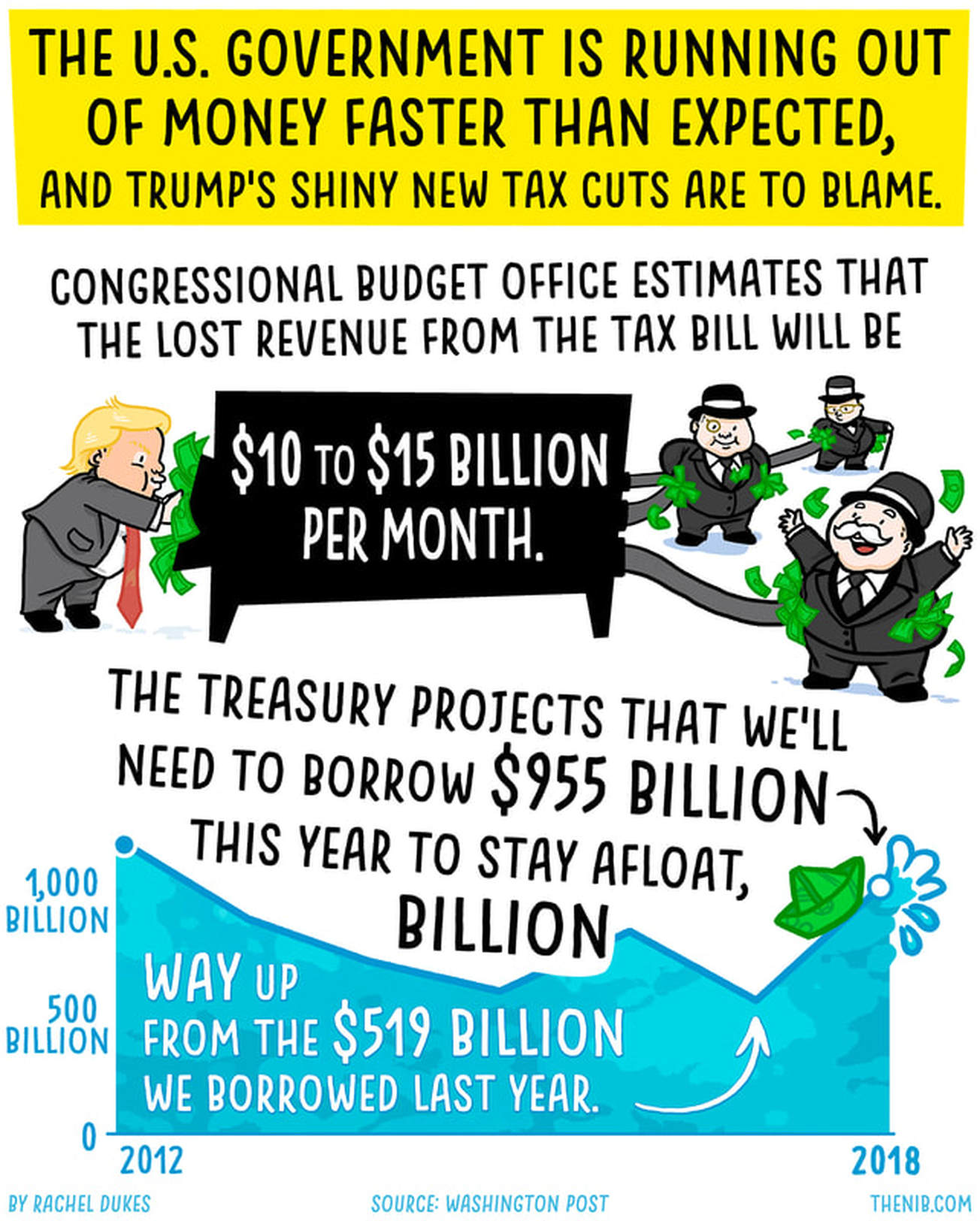

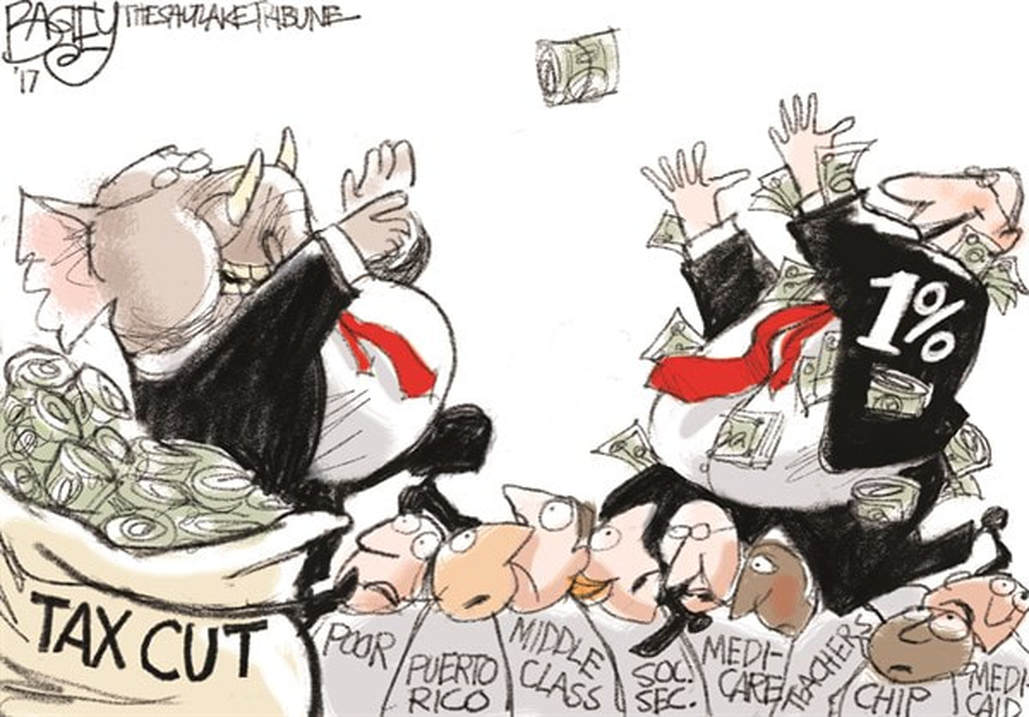

How Trump And Bush Tax Cuts For Billionaires Broke America

$10 Trillion in Added US Debt Since 2001 Shows 'Bush and Trump Tax Cuts Broke Our Modern Tax Structure'

Jon Queally — crooks & liars

October 25, 2023

The U.S. Treasury Department on Friday released new figures related to the 2023 budget that showed a troubling drop in the nation's tax revenue compared to GDP—a measure which fell to 16.5% despite a growing economy—and an annual deficit increase that essentially doubled from the previous year.

"After record U.S. government spending in 2020 and 2021" due to programs related to the economic fallout from the Covid-19 crisis, the Washington Post reports, "the deficit dropped from close to $3 trillion to close to $1 trillion in 2022. But rather than continue to fall to its pre-pandemic levels, the deficit unexpectedly jumped this year to roughly $2 trillion."

While much of the reporting on the Treasury figures painted a picture of various and overlapping dynamics to explain the surge in the deficit—including higher payments on debt due to interest rates, tax filing waivers related to extreme weather events, the impact of a student loan forgiveness program that was later rescinded, or a dip in capital gains receipts—progressive tax experts say none of those complexities should act to shield what's at the heart of a budget that brings in less than it spends: tax giveaways to the rich.

Bobby Kogan, senior director for federal budget policy at the Center for American Progress, has argued repeatedly that growing deficits in recent years have a clear and singular chief cause: Republican tax cuts that benefit mostly the wealthy and profitable corporations.

In response to the Treasury figures released Friday, Kogan said that "roughly 75%" of the surge in the deficit and the debt ratio, the amount of federal debt relative to the overall size of the economy, was due to revenue decreases resulting from GOP-approved tax cuts over recent decades. "Of the remaining 25%," he said, "more than half" was higher interest payments on the debt related to Federal Reserve policy.

"We have a revenue problem, due to tax cuts," said Kogan, pointing to the major tax laws enacted under the administrations of George W. Bush and Donald Trump. "The Bush and Trump tax cuts broke our modern tax structure. Revenue is significantly lower and no longer grows much with the economy." And he offered this visualization about a growing debt ratio:

"The point I want to make again and again and again is that, relative to the last time CBO was projecting stable debt/GDP, spending is down, not up," Kogan said in a tweet Friday night. "It's lower revenue that's 100% responsible for the change in debt projections. If you take away nothing else, leave with this point."

---

In a detailed analysis produced in March, Kogan explained that, "If not for the Bush tax cuts and their extensions—as well as the Trump tax cuts—revenues would be on track to keep pace with spending indefinitely, and the debt ratio (debt as a percentage of the economy) would be declining. Instead, these tax cuts have added $10 trillion to the debt since their enactment and are responsible for 57 percent of the increase in the debt ratio since 2001, and more than 90 percent of the increase in the debt ratio if the one-time costs of bills responding to COVID-19 and the Great Recession are excluded."

On Friday, the office of Sen. Sheldon Whitehouse (D-R.I.) cited those same numbers in a press release responding to the Treasury's new report.

"Tax giveaways for the wealthy are continuing to starve the federal government of needed revenue: those passed by former Presidents Trump and Bush have added $10 trillion to the debt and account for 57 percent of the increase in the debt-to-GDP ratio since 2001," read the statement. "If not for those tax cuts, U.S. debt would be declining as a share of the economy."

Whitehouse, who chairs the Senate Budget Committee, said the dip in federal revenue and growth in the overall deficit both have the same primary cause: GOP fealty to the wealthy individuals and powerful corporations that bankroll their campaigns.

"In their blind loyalty to their mega-donors, Republicans' fixation on giant tax cuts for billionaires has created a revenue problem that is driving up our national debt," Whitehouse said Friday night. "Even as federal spending fell over the last year relative to the size of the economy, the deficit increased because Republicans have rigged the tax code so that big corporations and the wealthy can avoid paying their fair share."

Offering a solution, Whitehouse said, "Fixing our corrupted tax code and cracking down on wealthy tax cheats would help bring down the deficit. It would also ensure teachers and firefighters don't pay higher tax rates than billionaires, level the playing field for small businesses, and promote a stronger economy for all."

None of the latest figures—those showing that tax cuts have injured revenues and therefore spiked deficits and increased debt—should be a surprise.

In 2018, shortly after the Trump tax cuts were signed into law, a Congressional Budget Office (CBO) report predicted precisely this result: revenues would plummet, annual deficits would grow, and not even the economic growth Republicans promised to justify the giveaway would be enough to make up the difference in the budget.

"The CBO's latest report exposes the scam behind the rosy rhetoric from Republicans that their tax bill would pay for itself," Sen. Chuck Schumer (D-N.Y.), now Senate Majority Leader, said at the time.

"Republicans racked up the national debt by giving tax breaks to their billionaire buddies, and now they want everyone else to pay for them."

In its 2018 report, the CBO predicted the deficit would rise to $804 billion by the end of that fiscal year. Now, for all the empty promises and howling from the GOP and their allied deficit hawks, the economic prescription they forced through Congress has produced an annual deficit more than double that figure, even as they insist that the nation's poorest and most vulnerable pay the price through cuts to key social programs, including food aid, education budgets, unemployment benefits, and housing assistance.

Meanwhile, the GOP majority in the U.S. House, with or without a Speaker currently holding the gavel, still plans to extend the Trump tax cuts if given half a chance. In May, a CBO analysis of that pending legislation found that such an extension would add $3.5 trillion to the national debt.

"Republicans racked up the national debt by giving tax breaks to their billionaire buddies, and now they want everyone else to pay for them," Sen. Whitehouse said at the time. "It is one of life's great enigmas that Republicans can keep a straight face while they simultaneously cite the deficit to extort massive spending cuts to critical programs and support a bill that would blow up deficits to extend trillions in tax cuts for the people who need them the least."

From 1947 to 2023: Retracing the complex, tragic Israeli-Palestinian conflict

Agence France-Presse / Raw Story

October 11, 2023 11:31AM ET

The Israeli-Palestinian conflict was reignited on October 7 after a surprise offensive launched by Hamas against Israel. In retaliation, Israel ordered air strikes and a "complete siege" of the Gaza Strip.

1947: Thousands of European Jewish emigrants, many of them Holocaust survivors, board a ship – which came to be called Exodus 1947 – bound for then British-controlled Palestine. Heading for the “promised land”, they are intercepted by British naval ships and sent back to Europe. Widely covered by the media, the incident sparks international outrage and plays a critical role in convincing the UK that a UN-brokered solution is necessary to solve the Palestine crisis.

A UN special committee proposes a partition plan allocating 56.47 percent of Palestine to a Jewish state and 43.53 percent to an Arab state. Palestinian representatives reject the plan, but their Jewish counterparts accept it.

On November 29, the UN General Assembly approves the plan, with 33 countries voting for partition, 13 voting against it and 10 abstentions.

1948-49: On May 14, David Ben-Gurion, Israel’s first prime minister, publicly reads the Proclamation of Independence. The declaration, which would go into effect the next day, comes a day ahead of the expiration of the British Mandate on Palestine. The Jewish state takes control of 77 percent of the territory of Mandate Palestine, according to the UN.

For Palestinians, this date marks the “Nakba”, the catastrophe that heralds their subsequent displacement and dispossession.

As hundreds of thousands of Palestinians, hearing word of massacres in villages such as Deir Yassin, flee towards Egypt, Lebanon and Jordanian territory, the armies of Egypt, Syria, Lebanon, Jordan and Iraq attack Israel, launching the 1948 Arab-Israeli War.

The Arab armies are repelled, a ceasefire is declared and new borders – more favorable to Israel – are drawn. Jordan takes control of the West Bank and East Jerusalem while Egypt controls the Gaza Strip.

1956: The Second Arab-Israeli War, or the Suez Crisis, takes place after Egypt nationalizes the Suez Canal. In response, Israel, the United Kingdom and France form an alliance, and Israel occupies the Gaza Strip and the Sinai Peninsula. The Israeli army eventually withdraws its troops under pressure from the US and the USSR.

1959: Yasser Arafat sets up the Palestinian organization Fatah in Gaza and Kuwait. It later becomes the main component of the Palestine Liberation Organisation (PLO).

1964: The PLO is created.

1967: The Third Arab-Israeli War, or the Six-Day War, between Israel and its Arab neighbors, results in a dramatic redrawing of the Middle East map. Israel seizes the West Bank and East Jerusalem, the Gaza Strip, the Sinai Peninsula and the Golan Heights.

1973: On October 6, during the Jewish holiday of Yom Kippur, Egyptian and Syrian armies launch offensives against Israel, marking the start of a new regional war. The Yom Kippur War, which ends 19 days later with Israel repelling the Arab armies, results in heavy casualties on all sides – at least several thousand deaths.

1979: An Israeli-Egyptian peace agreement is sealed in Washington following the Camp David Accords signed in 1978 by Egyptian President Anwar Sadat and Israeli Prime Minister Menachem Begin. According to the terms of this agreement, Egypt regains the Sinai Peninsula, which it had lost after the Six-Day War. Sadat becomes the first Arab leader to recognise the State of Israel.

1982: Under Defense Minister Ariel Sharon, Israeli troops storm into neighbouring Lebanon in a controversial military mission called “Operation Peace for Galilee”. The aim of the operation is to wipe out Palestinian guerrilla bases in southern Lebanon, but Israeli troops push all the way to the Lebanese capital, Beirut.

The subsequent routing of the PLO under Arafat leaves the Palestinian refugee camps in Lebanon essentially defenceless. From September 16 to 18, Lebanese Christian Phalangist militiamen – with ties to Israel – enter the camps of Sabra and Shatila in Beirut, unleashing a brutal massacre that shocks the international community. The massacres, the subject of an Israeli inquiry known as the Kahan Commission, would subsequently cost Sharon his job as defence minister.

1987: Uprisings in Palestinian refugee camps in Gaza spread to the West Bank marking the start of the First Palestinian Intifada ("uprising" in Arabic). Nicknamed the "war of stones", the First Intifada lasts until 1993, costing more than 1,000 Palestinian lives. The image of the stone-throwing Palestinian demonstrators pitched against Israel’s military might comes to symbolise the Palestinian struggle.

It was also during this uprising that Hamas, influenced by the ideology of Egypt's Muslim Brotherhood, was born. From the outset, the Islamist movement favours armed struggle and rejects outright any legitimacy of an Israeli state.